There are many fun things we can do with Fleet Server and elastic-agent.

To edit and change settings to do fun things with Fleet Server, you first have to understand how Fleet Server works with Kibana, Elasticsearch, and elastic-agent.

Fleet Server itself is an elastic-agent running in server mode. It needs to communicate with Kibana and Elasticsearch as well as with the elastic-agents. It uses Elasticsearch as its configuration store, so that it can configure and keep track of the elastic-agents that are registered against it. Kibana is used to configure Fleet Server: all Fleet API calls are made to the Kibana endpoint.
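To make that split concrete, here is a minimal sketch that looks at both sides: the Fleet system indices stored in Elasticsearch (such as `.fleet-agents` and `.fleet-policies`) and the agent policies returned by the Fleet API on the Kibana endpoint. `ES_URL`, `KIBANA_URL`, and `ELASTIC_PASSWORD` are placeholders for your own cluster, and `--insecure` is only meant for a lab with self-signed certificates.

```shell
#!/usr/bin/env bash
# Sketch: Fleet state lives in Elasticsearch, while Fleet is managed via Kibana.
# ES_URL, KIBANA_URL and ELASTIC_PASSWORD are placeholders for your cluster;
# --insecure is only for a lab with self-signed certificates.
set -euo pipefail

ES_URL="${ES_URL:-https://localhost:9200}"
KIBANA_URL="${KIBANA_URL:-https://localhost:5601}"
ELASTIC_PASSWORD="${ELASTIC_PASSWORD:-changeme}"

# List the Fleet system indices (for example .fleet-agents, .fleet-policies).
fleet_indices() {
  curl --fail --silent --show-error --insecure -u "elastic:${ELASTIC_PASSWORD}" \
    "${ES_URL}/_cat/indices/.fleet-*?v&expand_wildcards=all"
}

# Read the agent policies through the Fleet API, which is served by Kibana.
agent_policies() {
  curl --fail --silent --show-error --insecure -u "elastic:${ELASTIC_PASSWORD}" \
    -H 'kbn-xsrf: true' "${KIBANA_URL}/api/fleet/agent_policies"
}
```

Export your values, source the script, and call `fleet_indices` or `agent_policies`. The exact set of `.fleet-*` indices depends on your stack version.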

When you register (enroll) an elastic-agent, you register it against a Fleet Server. If your Fleet Server uses a self-signed certificate, you must either specify the CA or add `--insecure` to register your agent against it. Once the elastic-agent is registered, Fleet Server sends it its configuration (`state.yml`), and the agent configures the various Beats, integrations, modules, and Endpoint agents using that configuration.
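A minimal sketch of the two enrollment options, wrapped in a hypothetical `enroll_agent` helper: pass a CA file and it enrolls with `--certificate-authorities`; leave it out and it falls back to `--insecure`. The Fleet URL, enrollment token, and CA path are placeholders.

```shell
#!/usr/bin/env bash
# Sketch: enroll an elastic-agent against Fleet Server, either trusting a
# specific CA or (lab only) skipping certificate verification.
# enroll_agent is a hypothetical helper; URL, token and CA path are placeholders.
set -euo pipefail

# Usage: enroll_agent <fleet-url> <enrollment-token> [ca-file]
enroll_agent() {
  local url="$1" token="$2" ca="${3:-}"
  local args=(enroll --url="$url" --enrollment-token="$token")
  if [[ -n "$ca" ]]; then
    # Verify the Fleet Server certificate against this CA.
    args+=(--certificate-authorities="$ca")
  else
    # Self-signed lab setup: do not verify the Fleet Server certificate.
    args+=(--insecure)
  fi
  sudo elastic-agent "${args[@]}"
}
```

For example, `enroll_agent https://fleet.example.com:8220 "$TOKEN" /path/to/ca.crt`, where `TOKEN` holds an enrollment token copied from Fleet. Trusting the CA is the safer choice; `--insecure` is convenient for a throwaway lab.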

So what kind of fun things can we do?

We saw in https://www.gooksu.com/2022/05/fleet-server-with-logstash-output-elastic-agent/ that you can configure Logstash as an output in Fleet. This feature is still in beta, but it should lead to ingestion improvements once it is GA.

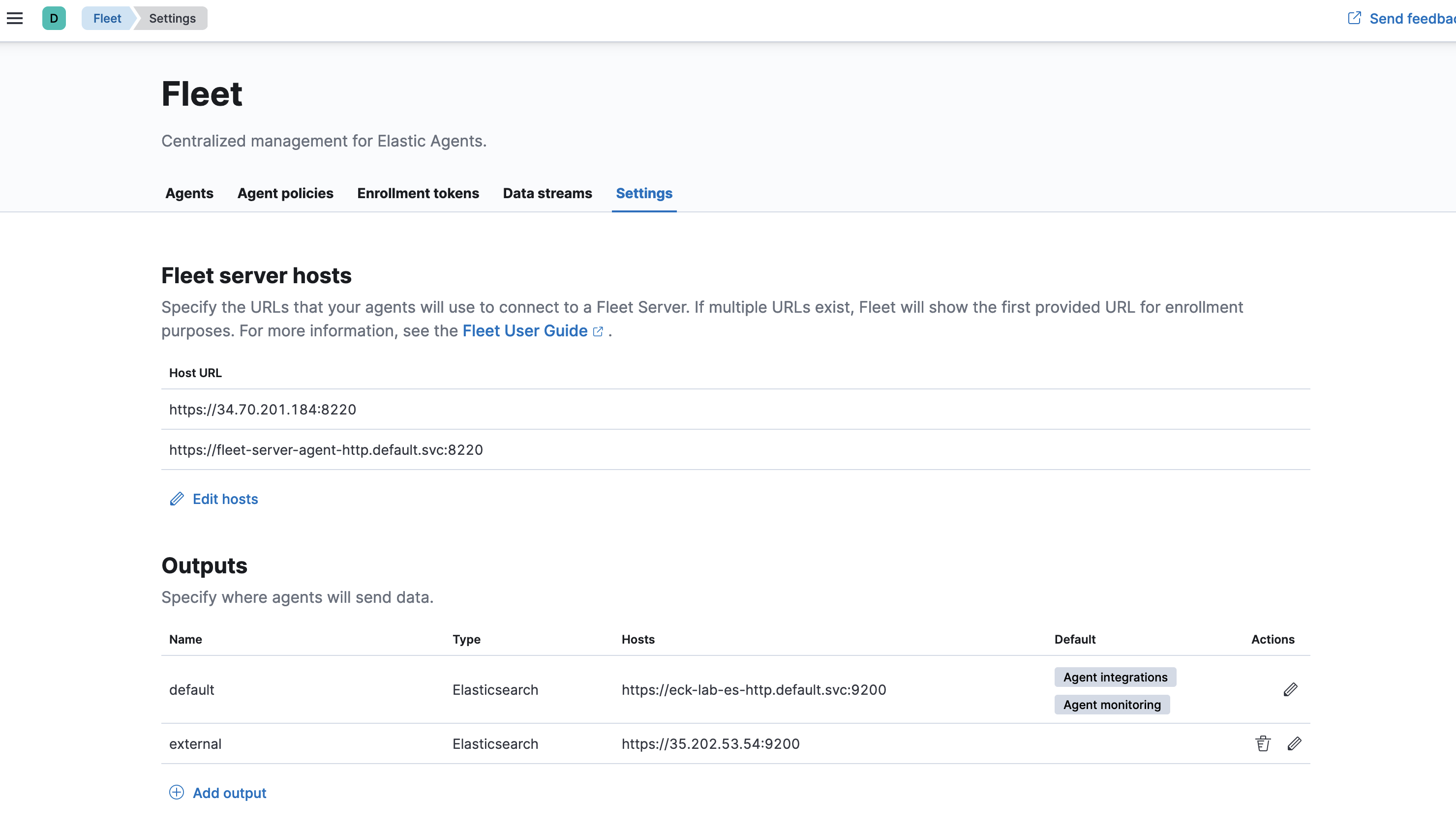

Starting with 8.2 you can configure multiple outputs in Fleet settings, which you can tie to different agent policies. So, say, some policies send their data directly to Elasticsearch while others send it via Logstash, and once Logstash is in the mix you can start sending the data to other deployments! This feature makes things very flexible and lets you do a lot. If in the future there is a way to add or change API keys, or to specify a username/password for Elasticsearch outputs, you could even send data natively to other deployments with ease.
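Outputs can also be created through the Fleet API in Kibana instead of the UI. This is a minimal sketch assuming the `POST /api/fleet/outputs` endpoint with `name`, `type`, and `hosts` fields; `KIBANA_URL`, `ELASTIC_PASSWORD`, and the output names and hosts are placeholders. In the 8.2 UI a Logstash output also asks for SSL settings (CA, client certificate and key), which this sketch leaves out, so check the Fleet API reference for your version.

```shell
#!/usr/bin/env bash
# Sketch: create an extra Fleet output through the Fleet API in Kibana.
# KIBANA_URL, ELASTIC_PASSWORD and all names/hosts are placeholders.
# --insecure is only for a lab with self-signed certificates.
set -euo pipefail

KIBANA_URL="${KIBANA_URL:-https://localhost:5601}"
ELASTIC_PASSWORD="${ELASTIC_PASSWORD:-changeme}"

# Build the JSON body for one output with a single host.
# Assumes plain values without quotes or backslashes (no JSON escaping is done).
output_body() {
  local name="$1" type="$2" host="$3"
  printf '{"name":"%s","type":"%s","hosts":["%s"]}' "$name" "$type" "$host"
}

# POST the output; Kibana requires the kbn-xsrf header on write requests.
# Usage: create_output <name> <type> <host>
create_output() {
  curl --fail --silent --show-error --insecure \
    -u "elastic:${ELASTIC_PASSWORD}" \
    -H 'kbn-xsrf: true' -H 'Content-Type: application/json' \
    -X POST "${KIBANA_URL}/api/fleet/outputs" \
    --data "$(output_body "$@")"
}
```

For example `create_output ls-out logstash logstash.example.com:5044` or `create_output other-es elasticsearch https://other-es.example.com:9200`. The JSON response should include the new output's `id`, which is what an agent policy refers to.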

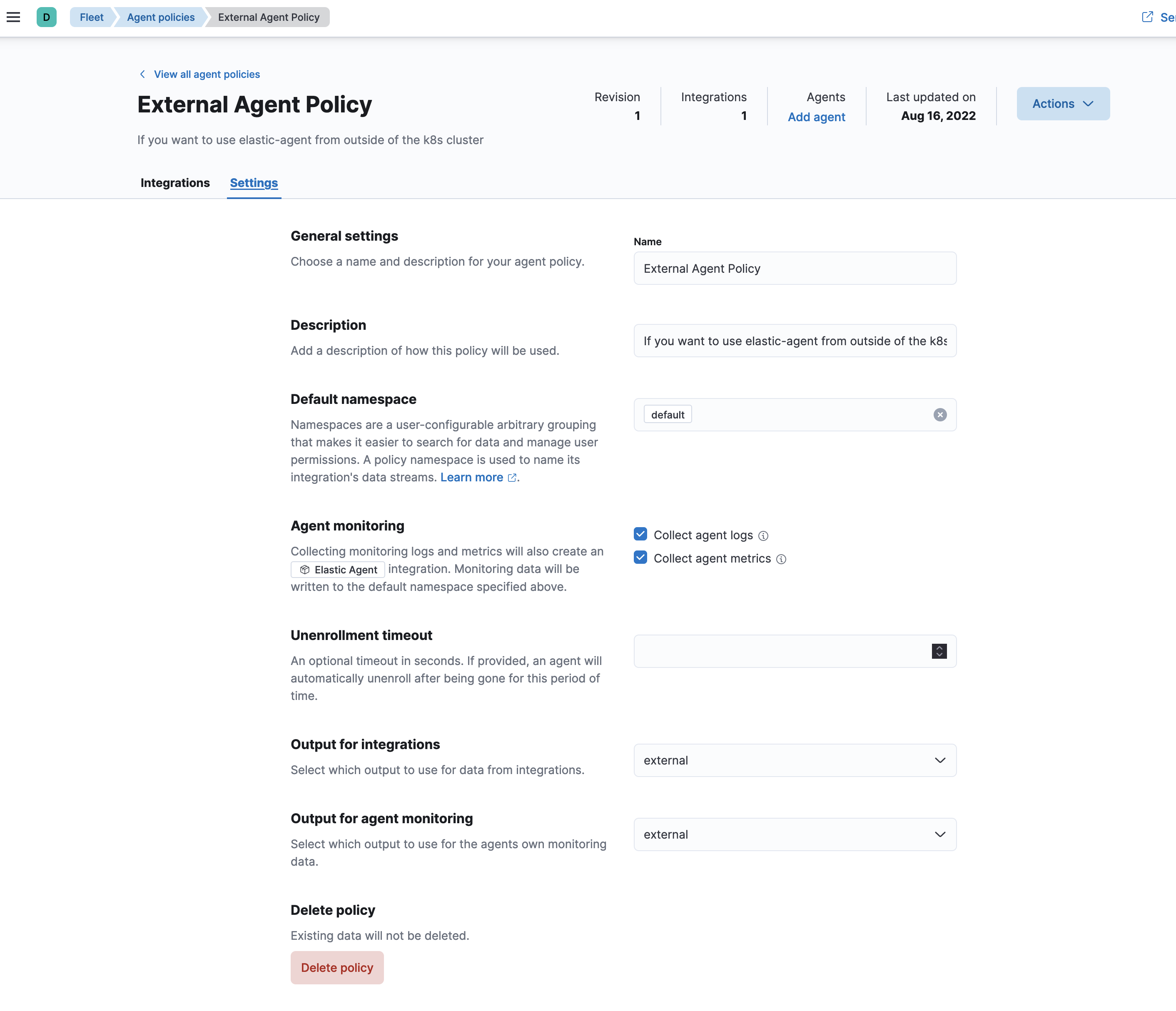

Related to the above, you can configure an agent policy with separate outputs for its integrations and for agent monitoring! So you can send your monitoring data to a different cluster than your integration data.
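The same split can be set through the Fleet API. A minimal sketch, assuming the agent policy fields `data_output_id` and `monitoring_output_id` hold the IDs of two existing outputs; the policy name, the `default` namespace, and the output IDs are placeholders, and `--insecure` is for lab use only.

```shell
#!/usr/bin/env bash
# Sketch: create an agent policy whose integration data and monitoring data
# go to two different Fleet outputs. Output IDs and names are placeholders.
set -euo pipefail

KIBANA_URL="${KIBANA_URL:-https://localhost:5601}"
ELASTIC_PASSWORD="${ELASTIC_PASSWORD:-changeme}"

# Assumes plain values without quotes or backslashes (no JSON escaping is done).
policy_body() {
  local name="$1" data_output_id="$2" monitoring_output_id="$3"
  printf '{"name":"%s","namespace":"default","monitoring_enabled":["logs","metrics"],"data_output_id":"%s","monitoring_output_id":"%s"}' \
    "$name" "$data_output_id" "$monitoring_output_id"
}

# Usage: create_agent_policy <name> <data-output-id> <monitoring-output-id>
create_agent_policy() {
  curl --fail --silent --show-error --insecure \
    -u "elastic:${ELASTIC_PASSWORD}" \
    -H 'kbn-xsrf: true' -H 'Content-Type: application/json' \
    -X POST "${KIBANA_URL}/api/fleet/agent_policies" \
    --data "$(policy_body "$@")"
}
```

Passing the same output ID twice gives the usual single-output policy; passing two different IDs sends monitoring data somewhere other than the integration data.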

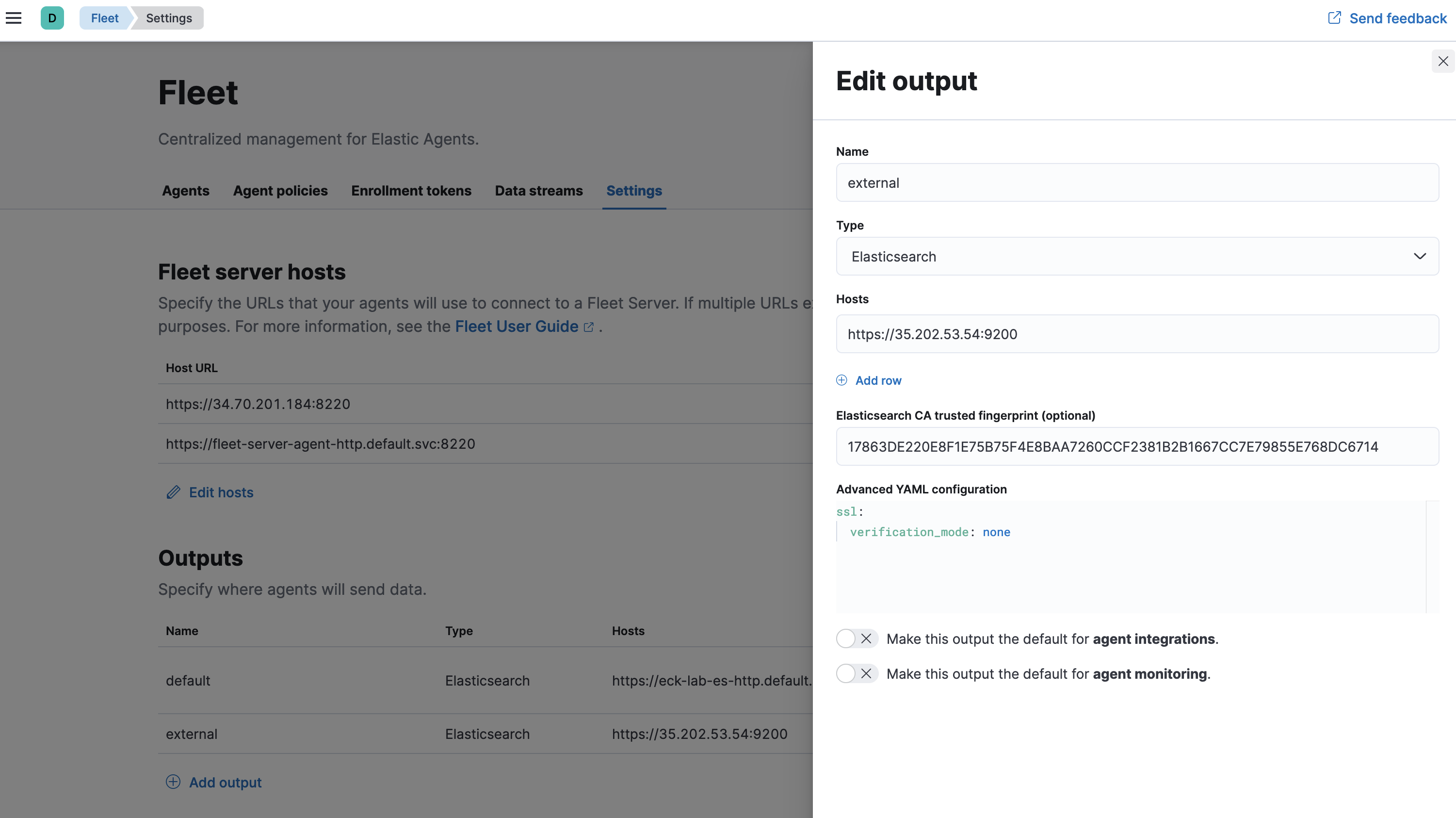

Fingerprinting. Before fingerprinting, you either had to stage your CA on each endpoint ahead of time or paste the contents of your CA into the Advanced YAML Options, but starting with 8.0 you can fingerprint your CA and add the fingerprint instead. When a CA with that fingerprint appears in the certificate chain presented by the server, the endpoints add it to their trusted CAs. The fingerprint must be a SHA-256 fingerprint formatted without the `:` separators. For my scripts I use `openssl x509 -fingerprint -sha256 -noout -in CA.crt | awk -F'=' '{print $2}' | sed 's/://g'`.
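Here is the same idea as a small self-contained script: a hypothetical `ca_fingerprint` helper that prints the colon-free SHA-256 fingerprint, demonstrated on a throwaway self-signed CA so it runs anywhere `openssl` is installed. `cut` and `tr` stand in for the `awk`/`sed` pair and produce the same result.

```shell
#!/usr/bin/env bash
# Sketch: print a CA's SHA-256 fingerprint without ':' separators,
# demonstrated on a throwaway self-signed CA (not your real one).
set -euo pipefail

# $1: path to a PEM-encoded CA certificate.
# openssl prints e.g. "SHA256 Fingerprint=AB:CD:..."; keep the part after '='
# and delete the colons.
ca_fingerprint() {
  openssl x509 -in "$1" -noout -fingerprint -sha256 | cut -d'=' -f2 | tr -d ':'
}

# Demo only: create a short-lived self-signed CA in a temp dir.
tmpdir=$(mktemp -d)
trap 'rm -rf "$tmpdir"' EXIT
openssl req -x509 -newkey rsa:2048 -nodes -days 1 -subj "/CN=demo-ca" \
  -keyout "$tmpdir/ca.key" -out "$tmpdir/ca.crt" 2>/dev/null

fp=$(ca_fingerprint "$tmpdir/ca.crt")
echo "$fp"
```

Against a real deployment you would run `ca_fingerprint /path/to/CA.crt` and paste the 64 hex characters into the output's CA trusted fingerprint setting.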

My deploy-eck.sh script was updated today for Fleet 8.2+ so that it configures the load balancer (LB) endpoint for Fleet Server as well as an output using the LB endpoint for the Elasticsearch cluster, so that you can add elastic-agents from outside the k8s cluster! It even configures the agent policy to use for your external endpoints.

```
$ ./deploy-eck.sh cleanup
********** Cleaning up **********
[DEBUG] DELETING Resources for: /Users/jlim/eckstack/license.yaml
[DEBUG] DELETING Resources for: /Users/jlim/eckstack/operator.yaml
[DEBUG] DELETING Resources for: /Users/jlim/eckstack/crds.yaml
[DEBUG] All cleanedup

~ > ./deploy-eck.sh fleet 8.2.0 2.3.0
[DEBUG] ECK 2.3.0 version validated.
[DEBUG] This might take a while. In another window you can watch -n2 kubectl get all or kubectl get events -w to watch the stack being stood up
********** Deploying ECK 2.3.0 OPERATOR **************
[DEBUG] ECK 2.3.0 downloading crds: crds.yaml
[DEBUG] ECK 2.3.0 downloading operator: operator.yaml
[DEBUG] ECK Operator is starting. Checking again in 20 seconds. If the operator does not goto Running status in few minutes something is wrong. CTRL-C please
[DEBUG] ECK 2.3.0 OPERATOR is HEALTHY

NAME                     READY   STATUS    RESTARTS   AGE
pod/elastic-operator-0   1/1     Running   0          23s

NAME                             TYPE        CLUSTER-IP    EXTERNAL-IP   PORT(S)   AGE
service/elastic-webhook-server   ClusterIP   10.88.1.132   <none>        443/TCP   23s

NAME                                READY   AGE
statefulset.apps/elastic-operator   1/1     23s

[DEBUG] ECK 2.3.0 Creating license.yaml
[DEBUG] ECK 2.3.0 Applying trial license
********** Deploying ECK 2.3.0 STACK 8.2.0 CLUSTER eck-lab **************
[DEBUG] ECK 2.3.0 STACK 8.2.0 CLUSTER eck-lab Creating elasticsearch.yaml
[DEBUG] ECK 2.3.0 STACK 8.2.0 CLUSTER eck-lab Starting elasticsearch cluster.
[DEBUG] elasticsearch is starting. Checking again in 20 seconds. If this does not finish in few minutes something is wrong. CTRL-C please
[DEBUG] elasticsearch is starting. Checking again in 20 seconds. If this does not finish in few minutes something is wrong. CTRL-C please
[DEBUG] elasticsearch is starting. Checking again in 20 seconds. If this does not finish in few minutes something is wrong. CTRL-C please
[DEBUG] elasticsearch is starting. Checking again in 20 seconds. If this does not finish in few minutes something is wrong. CTRL-C please
[DEBUG] elasticsearch is starting. Checking again in 20 seconds. If this does not finish in few minutes something is wrong. CTRL-C please
[DEBUG] ECK 2.3.0 STACK 8.2.0 is HEALTHY

NAME      HEALTH   NODES   VERSION   PHASE   AGE
eck-lab   green    3       8.2.0     Ready   107s

[DEBUG] ECK 2.3.0 STACK 8.2.0 CLUSTER eck-lab Creating kibana.yaml
[DEBUG] ECK 2.3.0 STACK 8.2.0 CLUSTER eck-lab Starting kibana.
[DEBUG] kibana is starting. Checking again in 20 seconds. If this does not finish in few minutes something is wrong. CTRL-C please
[DEBUG] kibana is starting. Checking again in 20 seconds. If this does not finish in few minutes something is wrong. CTRL-C please
[DEBUG] kibana is starting. Checking again in 20 seconds. If this does not finish in few minutes something is wrong. CTRL-C please
[DEBUG] ECK 2.3.0 STACK 8.2.0 is HEALTHY

NAME      HEALTH   NODES   VERSION   AGE
eck-lab   green    1       8.2.0     66s

[DEBUG] Grabbing elastic password for eck-lab: C8282E0wCq84rhCu2NNWKp66
[DEBUG] Grabbing elasticsearch endpoint for eck-lab: https://35.202.53.54:9200
[DEBUG] Grabbing kibana endpoint for eck-lab: https://34.72.32.122:5601
********** Deploying ECK 2.3.0 STACK 8.2.0 Fleet Server & elastic-agent **************
[DEBUG] Patching kibana to set fleet settings
[DEBUG] Sleeping for 60 seconds to wait for kibana to be updated with the patch
..............................
[DEBUG] Creating fleet.yaml
[DEBUG] STACK VERSION: 8.2.0 Starting fleet-server & elastic-agents.
[DEBUG] agent is starting. Checking again in 20 seconds. If this does not finish in few minutes something is wrong. CTRL-C please
[DEBUG] agent is starting. Checking again in 20 seconds. If this does not finish in few minutes something is wrong. CTRL-C please
[DEBUG] ECK 2.3.0 STACK 8.2.0 is HEALTHY

NAME            HEALTH   AVAILABLE   EXPECTED   VERSION   AGE
elastic-agent   green    3           3          8.2.0     46s
fleet-server    green    1           1          8.2.0     46s

[DEBUG] Grabbing Fleet Server endpoint (external): https://34.70.201.184:8220
[DEBUG] Waiting 30 seconds for fleet server to calm down to set the external output
...............
[DEBUG] Output: external created. You can use this output for elastic-agent from outside of k8s cluster.
[DEBUG] Please create a new agent policy using the external output if you want to use elastic-agent from outside of k8s cluster.
[DEBUG] Please use https://34.70.201.184:8220 with --insecure to register your elastic-agent if you are coming from outside of k8s cluster.

[SUMMARY] ECK 2.3.0 STACK 8.2.0

NAME                                      READY   STATUS    RESTARTS      AGE
pod/eck-lab-es-default-0                  1/1     Running   0             5m55s
pod/eck-lab-es-default-1                  1/1     Running   0             5m55s
pod/eck-lab-es-default-2                  1/1     Running   0             5m55s
pod/eck-lab-kb-76c47d84bf-2rc4d           1/1     Running   0             2m59s
pod/elastic-agent-agent-6szlx             1/1     Running   2 (66s ago)   116s
pod/elastic-agent-agent-xlzbd             1/1     Running   2 (74s ago)   116s
pod/elastic-agent-agent-xv69b             1/1     Running   1 (71s ago)   116s
pod/fleet-server-agent-7c7b8865cd-wdd5v   1/1     Running   0             89s

NAME                               TYPE           CLUSTER-IP    EXTERNAL-IP     PORT(S)          AGE
service/eck-lab-es-default         ClusterIP      None          <none>          9200/TCP         5m55s
service/eck-lab-es-http            LoadBalancer   10.88.6.10    35.202.53.54    9200:32195/TCP   5m57s
service/eck-lab-es-internal-http   ClusterIP      10.88.4.122   <none>          9200/TCP         5m57s
service/eck-lab-es-transport       ClusterIP      None          <none>          9300/TCP         5m57s
service/eck-lab-kb-http            LoadBalancer   10.88.2.120   34.72.32.122    5601:32611/TCP   4m8s
service/fleet-server-agent-http    LoadBalancer   10.88.11.97   34.70.201.184   8220:30498/TCP   118s
service/kubernetes                 ClusterIP      10.88.0.1     <none>          443/TCP          9h

NAME                                 DESIRED   CURRENT   READY   UP-TO-DATE   AVAILABLE   NODE SELECTOR   AGE
daemonset.apps/elastic-agent-agent   3         3         3       3            3           <none>          116s

NAME                                 READY   UP-TO-DATE   AVAILABLE   AGE
deployment.apps/eck-lab-kb           1/1     1            1           4m7s
deployment.apps/fleet-server-agent   1/1     1            1           116s

NAME                                            DESIRED   CURRENT   READY   AGE
replicaset.apps/eck-lab-kb-76c47d84bf           1         1         1       2m59s
replicaset.apps/fleet-server-agent-7c7b8865cd   1         1         1       89s

NAME                                  READY   AGE
statefulset.apps/eck-lab-es-default   3/3     5m55s

[SUMMARY] STACK INFO:
eck-lab elastic password: C8282E0wCq84rhCu2NNWKp66
eck-lab elasticsearch endpoint: https://35.202.53.54:9200
eck-lab kibana endpoint: https://34.72.32.122:5601
eck-lab Fleet Server endpoint: https://34.70.201.184:8220

[SUMMARY] ca.crt is located in /Users/jlim/eckstack/ca.crt
[NOTE] If you missed the summary its also in /Users/jlim/eckstack/notes
[NOTE] You can start logging into kibana but please give things few minutes for proper startup and letting components settle down.
```
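If you want to look up the password and endpoints yourself, a minimal sketch using `kubectl` follows. It relies on ECK's naming conventions (a `<cluster>-es-elastic-user` secret holding the `elastic` password, and LoadBalancer services such as `<cluster>-es-http` and `fleet-server-agent-http` as listed above). It is not necessarily how deploy-eck.sh does it, and `CLUSTER=eck-lab` is an assumption matching this run.

```shell
#!/usr/bin/env bash
# Sketch: look up the elastic password and external IPs with kubectl,
# using ECK naming conventions. CLUSTER=eck-lab matches this run.
set -euo pipefail

CLUSTER="${CLUSTER:-eck-lab}"

# ECK stores the elastic user's password in the <cluster>-es-elastic-user secret.
elastic_password() {
  kubectl get secret "${CLUSTER}-es-elastic-user" \
    -o go-template='{{.data.elastic | base64decode}}'
}

# External IP assigned to a LoadBalancer service ($1: service name).
lb_ip() {
  kubectl get service "$1" -o jsonpath='{.status.loadBalancer.ingress[0].ip}'
}
```

For example, `echo "https://$(lb_ip "${CLUSTER}-es-http"):9200"` and `echo "https://$(lb_ip fleet-server-agent-http):8220"` print the Elasticsearch and Fleet Server endpoints, using the ports shown in the service list.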

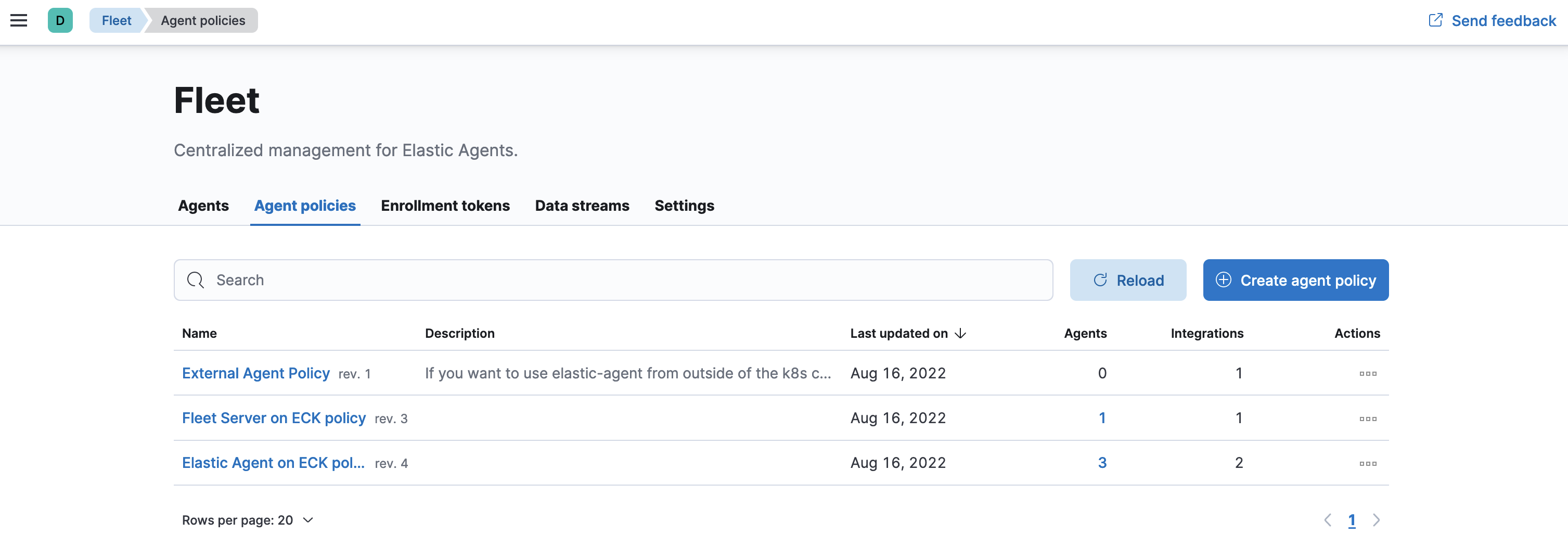

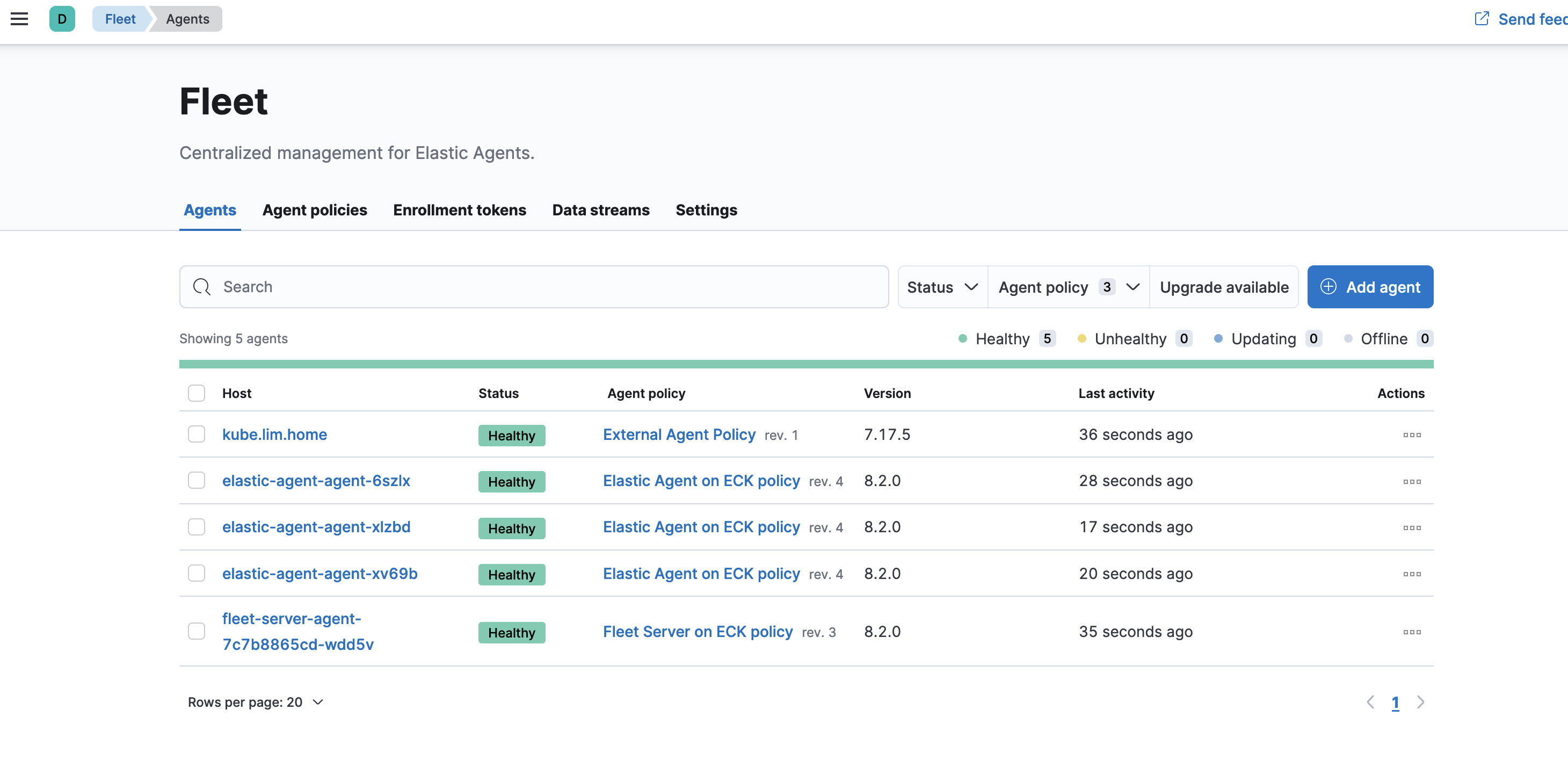

We can see that the Fleet Server hosts were updated with both the internal and external host URLs, and that the external output was added.

The External Agent Policy was also added.

And we can see that the output for both integrations and agent monitoring is set to the external output.

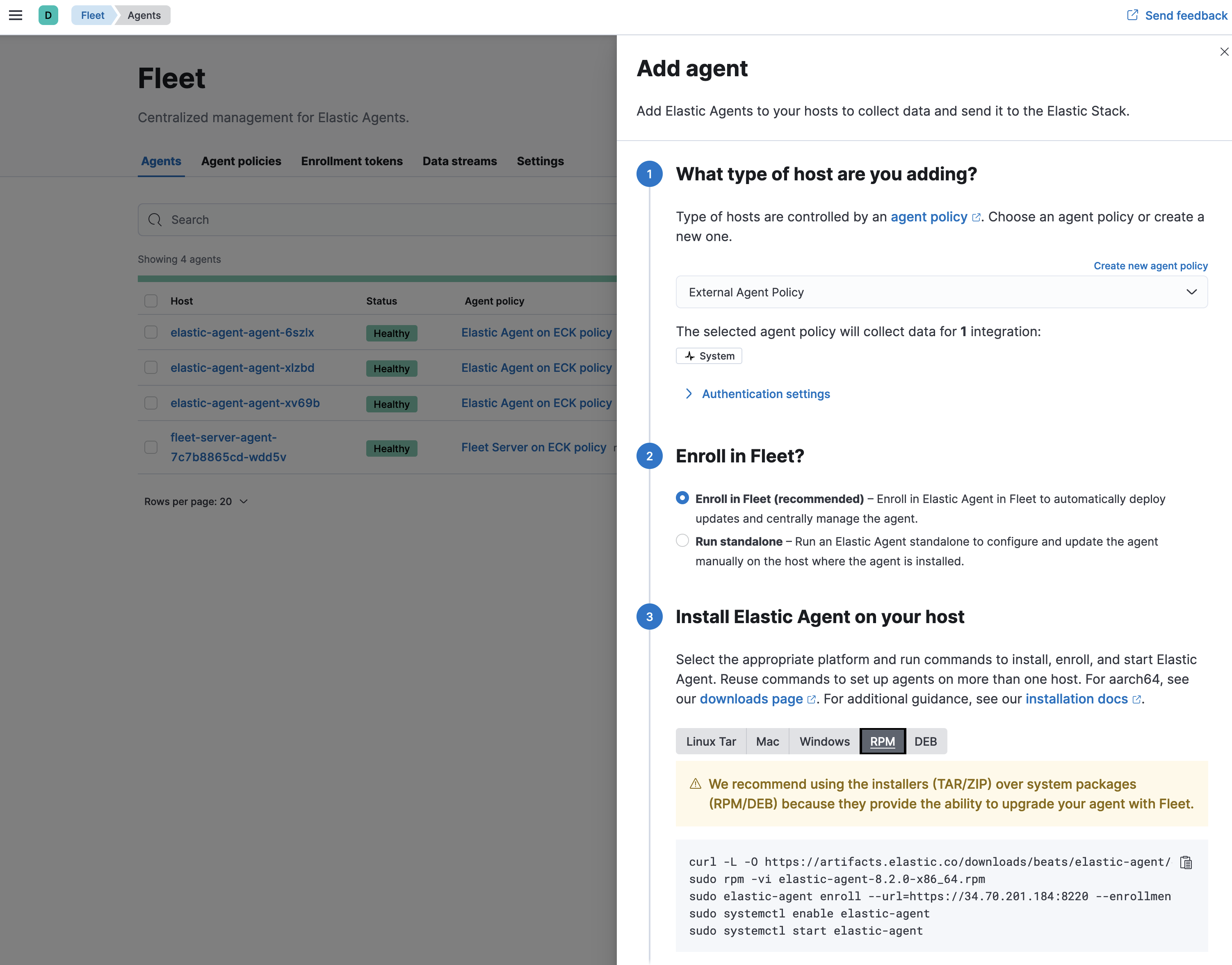

Let's go ahead and add an elastic-agent. My Elastic Stack and Fleet Server are running in a GKE (k8s) environment, and I will use the external output with the External Agent Policy to register my home lab machine to this cluster.

Since my environment uses self-signed certificates, I will add the `--insecure` flag when enrolling:

# sudo elastic-agent enroll --url=https://34.70.201.184:8220 --enrollment-token=azUyVnFZSUIwS0ZFMTJvQ1VGY2M6S2l4NnQ5MkpScU9kb3dTckRkV2hVdw== --insecure

This will replace your current settings. Do you want to continue? [Y/n]:y

2022-08-16T21:53:51.982-0500 WARN [tls] tlscommon/tls_config.go:101 SSL/TLS verifications disabled.

2022-08-16T21:53:52.315-0500 INFO cmd/enroll_cmd.go:454 Starting enrollment to URL: https://34.70.201.184:8220/

2022-08-16T21:53:52.418-0500 WARN [tls] tlscommon/tls_config.go:101 SSL/TLS verifications disabled.

2022-08-16T21:53:53.244-0500 INFO cmd/enroll_cmd.go:254 Successfully triggered restart on running Elastic Agent.

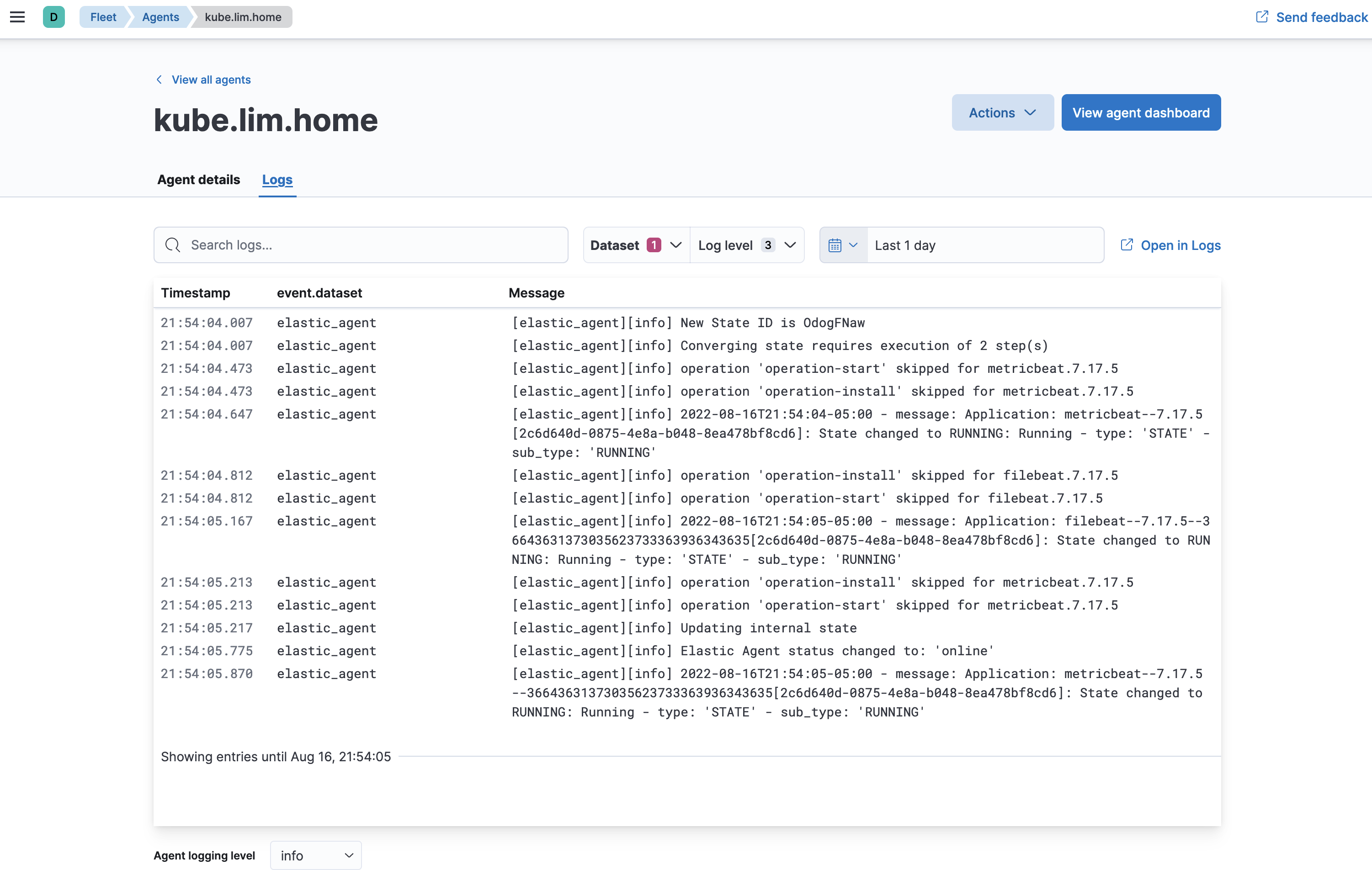

Successfully enrolled the Elastic Agent.And we can see that my lab machine from home can get to the fleet server on gke

and that we are ingesting

Also, if you are having issues with elastic-agent, please see some common elastic-agent troubleshooting steps at https://www.gooksu.com/2022/06/elastic-fleet-server-elastic-agent-common-troubleshooting/

Hello jlim0930, we have an issue when we want to use a “public” certificate obtained from GoDaddy for the external configuration.

As we are running Fleet Server in a container, it always fails with `x509: certificate signed by unknown authority`.

Do you have a guide for using Fleet with public certificates?

Thanks in advance

Vuk

Hello Vuk,

Set these environment variables on your Fleet Server container:

```yaml
- name: FLEET_CA
  value: "/usr/share/elastic-agent/certs/ca.crt"
- name: FLEET_URL
  value: "https://fleet-server8-test.mydomain.com:443"
- name: FLEET_SERVER_CERT
  value: "/usr/share/elastic-agent/certs/tls.crt"
- name: FLEET_SERVER_CERT_KEY
  value: "/usr/share/elastic-agent/certs/tls.key"
```

Create and mount the corresponding secret for ca.crt, tls.crt, and tls.key in your deployment, and also configure your ingress to use the proper certificate.
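For the certificate side, here is a minimal sketch that first checks with `openssl verify` that `tls.crt` verifies against the CA bundle in `ca.crt`, then stores the three files in a Kubernetes secret with `kubectl create secret generic`. The directory, the secret name `fleet-server-certs`, and the assumption that `ca.crt` holds the root and any intermediate certificates that issued `tls.crt` (for a public certificate, the issuer's chain rather than only your own certificate) are placeholders and assumptions. Mounted at `/usr/share/elastic-agent/certs`, the secret keys become the file names the environment variables point to.

```shell
#!/usr/bin/env bash
# Sketch: check that tls.crt chains to ca.crt, then store both files and the
# key in a Kubernetes secret for the Fleet Server container.
# CERT_DIR and SECRET_NAME (fleet-server-certs) are hypothetical placeholders.
set -euo pipefail

CERT_DIR="${CERT_DIR:-.}"
SECRET_NAME="${SECRET_NAME:-fleet-server-certs}"

# Exits non-zero if tls.crt does not verify against the CA bundle in ca.crt
# (assumed to hold the issuing root and any intermediate certificates).
check_chain() {
  openssl verify -CAfile "$CERT_DIR/ca.crt" "$CERT_DIR/tls.crt"
}

# Secret keys become file names when mounted, so they match ca.crt/tls.crt/tls.key.
create_cert_secret() {
  kubectl create secret generic "$SECRET_NAME" \
    --from-file=ca.crt="$CERT_DIR/ca.crt" \
    --from-file=tls.crt="$CERT_DIR/tls.crt" \
    --from-file=tls.key="$CERT_DIR/tls.key"
}
```

Running `check_chain` before `create_cert_secret` catches a missing intermediate locally instead of in the agent logs.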