Let's say you are running your Elastic Stack in k8s with ECK and you need to upgrade your environment. How would you do it, and in what order?

- ECK operator

- elasticsearch

- kibana

- fleet-server

- elastic-agent

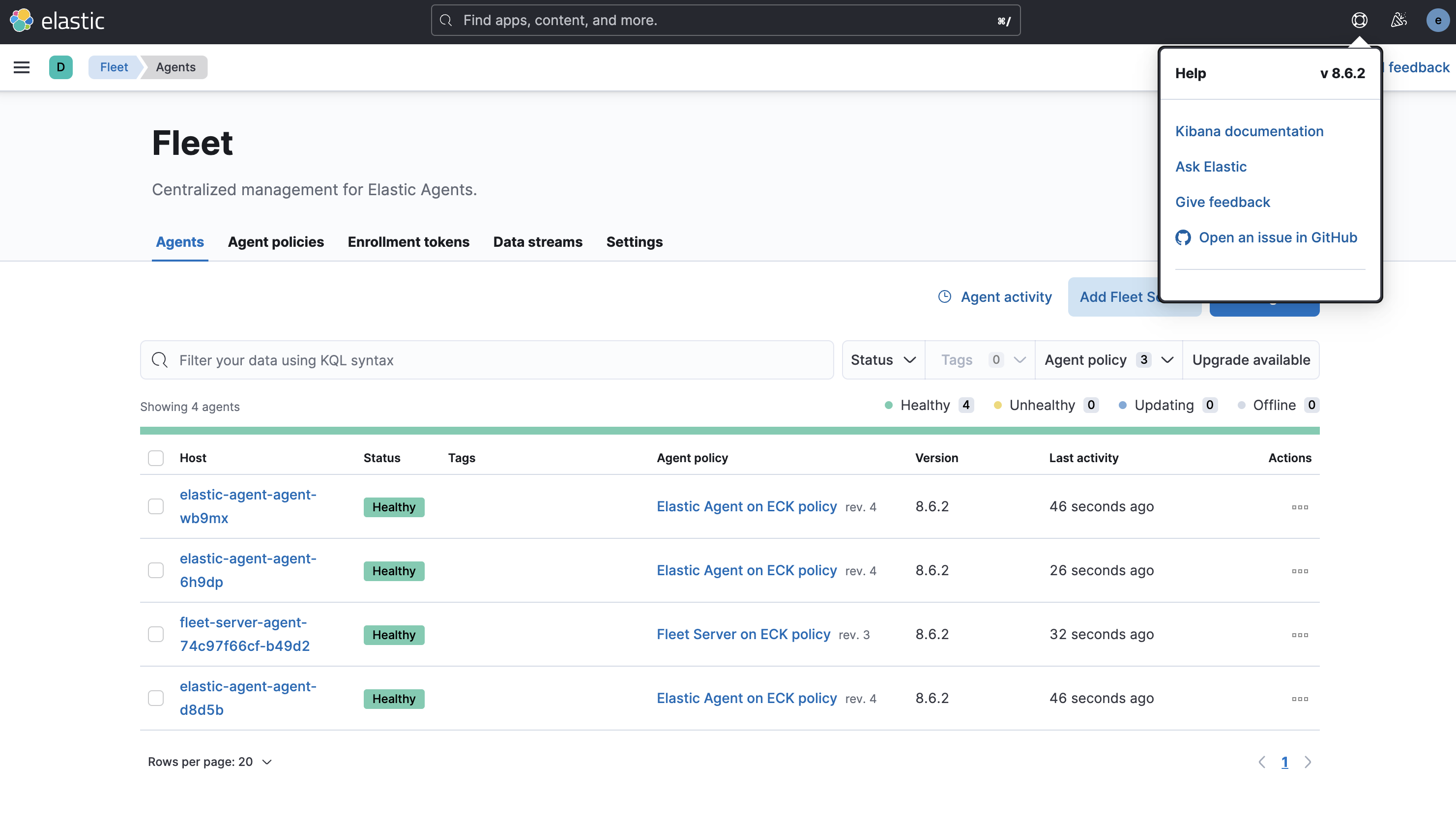

I will use my deploy-elastick8s.sh script to deploy a fleet deployment (elasticsearch + kibana + fleet server + elastic-agents running as a DaemonSet). I will install ECK operator 2.7.0 with stack 8.6.2 and then upgrade the stack to 8.7.0.

> ./deploy-elastick8s.sh fleet 8.6.2 2.7.0

[DEBUG] jq found

[DEBUG] docker found & running

[DEBUG] kubectl found

[DEBUG] openssl found

[DEBUG] container image docker.elastic.co/elasticsearch/elasticsearch:8.6.2 is valid

[DEBUG] ECK 2.7.0 version validated.

[DEBUG] This might take a while. In another window you can watch -n2 kubectl get all or kubectl get events -w to watch the stack being stood up

********** Deploying ECK 2.7.0 OPERATOR **************

[DEBUG] ECK 2.7.0 downloading crds: crds.yaml

[DEBUG] ECK 2.7.0 downloading operator: operator.yaml

⠏ [DEBUG] Checking on the operator to become ready. If this does not finish in ~5 minutes something is wrong

NAME READY STATUS RESTARTS AGE

pod/elastic-operator-0 1/1 Running 0 3s

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

service/elastic-webhook-server ClusterIP 10.86.3.13 <none> 443/TCP 3s

NAME READY AGE

statefulset.apps/elastic-operator 1/1 3s

[DEBUG] ECK 2.7.0 Creating license.yaml

[DEBUG] ECK 2.7.0 Applying trial license

********** Deploying ECK 2.7.0 STACK 8.6.2 CLUSTER eck-lab **************

[DEBUG] ECK 2.7.0 STACK 8.6.2 CLUSTER eck-lab Creating elasticsearch.yaml

[DEBUG] ECK 2.7.0 STACK 8.6.2 CLUSTER eck-lab Starting elasticsearch cluster.

⠏ [DEBUG] Checking to ensure all readyReplicas(3) are ready for eck-lab-es-default. IF this does not finish in ~5 minutes something is wrong

NAME READY AGE

eck-lab-es-default 3/3 108s

[DEBUG] ECK 2.7.0 STACK 8.6.2 CLUSTER eck-lab Creating kibana.yaml

[DEBUG] ECK 2.7.0 STACK 8.6.2 CLUSTER eck-lab Starting kibana.

⠏ [DEBUG] Checking to ensure all readyReplicas(1) are ready for eck-lab-kb. IF this does not finish in ~5 minutes something is wrong

NAME READY UP-TO-DATE AVAILABLE AGE

eck-lab-kb 1/1 1 1 52s

[DEBUG] Grabbed elastic password for eck-lab: l1AHAJHgFk278GkZz053484N

[DEBUG] Grabbed elasticsearch endpoint for eck-lab: https://35.222.214.44:9200

[DEBUG] Grabbed kibana endpoint for eck-lab: https://34.69.122.156:5601

********** Deploying ECK 2.7.0 STACK 8.6.2 Fleet Server & elastic-agent **************

[DEBUG] Patching kibana to set fleet settings

[DEBUG] Sleeping for 60 seconds to wait for kibana to be updated with the patch

⠏ [DEBUG] Sleeping for 60 seconds waiting for kibana

[DEBUG] Creating fleet.yaml

[DEBUG] STACK VERSION: 8.6.2 Starting fleet-server & elastic-agents.

[DEBUG] Grabbing Fleet Server endpoint (external): https://<pending>:8220

[DEBUG] Grabbing Fleet Server endpoint (external): https://35.224.137.190:8220

[DEBUG] Waiting 30 seconds for fleet server to calm down to set the external output

[DEBUG] Setting Fleet Server URLs waiting for fleet server

[DEBUG] Setting Elasticsearch URL and CA Fingerprint

[DEBUG] Setting Fleet default output

[DEBUG] Output: external created. You can use this output for elastic-agent from outside of k8s cluster.

[DEBUG] Please create a new agent policy using the external output if you want to use elastic-agent from outside of k8s cluster.

[DEBUG] Please use https://35.224.137.190:8220 with --insecure to register your elastic-agent if you are coming from outside of k8s cluster.

[SUMMARY] ECK 2.7.0 STACK 8.6.2

NAME READY STATUS RESTARTS AGE

pod/eck-lab-es-default-0 1/1 Running 0 5m25s

pod/eck-lab-es-default-1 1/1 Running 0 5m25s

pod/eck-lab-es-default-2 1/1 Running 0 5m25s

pod/eck-lab-kb-54598dc788-2ckzp 1/1 Running 0 2m48s

pod/elastic-agent-agent-6h9dp 0/1 CrashLoopBackOff 2 (17s ago) 81s

pod/elastic-agent-agent-d8d5b 1/1 Running 1 (53s ago) 81s

pod/elastic-agent-agent-lfrtr 0/1 CrashLoopBackOff 2 (22s ago) 81s

pod/fleet-server-agent-74c97f66cf-wgwxk 1/1 Running 0 74s

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

service/eck-lab-es-default ClusterIP None <none> 9200/TCP 5m35s

service/eck-lab-es-http LoadBalancer 10.86.1.95 35.222.214.44 9200:30749/TCP 5m37s

service/eck-lab-es-internal-http ClusterIP 10.86.0.254 <none> 9200/TCP 5m37s

service/eck-lab-es-transport ClusterIP None <none> 9300/TCP 5m37s

service/eck-lab-kb-http LoadBalancer 10.86.3.54 34.69.122.156 5601:30839/TCP 3m45s

service/fleet-server-agent-http LoadBalancer 10.86.3.21 35.224.137.190 8220:32000/TCP 106s

service/kubernetes ClusterIP 10.86.0.1 <none> 443/TCP 9h

NAME DESIRED CURRENT READY UP-TO-DATE AVAILABLE NODE SELECTOR AGE

daemonset.apps/elastic-agent-agent 3 3 1 3 1 <none> 82s

NAME READY UP-TO-DATE AVAILABLE AGE

deployment.apps/eck-lab-kb 1/1 1 1 3m44s

deployment.apps/fleet-server-agent 1/1 1 1 83s

NAME DESIRED CURRENT READY AGE

replicaset.apps/eck-lab-kb-54598dc788 1 1 1 2m48s

replicaset.apps/eck-lab-kb-7448c789db 0 0 0 3m44s

replicaset.apps/eck-lab-kb-c86874f64 0 0 0 3m14s

replicaset.apps/fleet-server-agent-74c97f66cf 1 1 1 74s

replicaset.apps/fleet-server-agent-fbdd85dc9 0 0 0 83s

NAME READY AGE

statefulset.apps/eck-lab-es-default 3/3 5m35s

[SUMMARY] STACK INFO:

eck-lab elastic password: l1AHAJHgFk278GkZz053484N

eck-lab elasticsearch endpoint: https://35.222.214.44:9200

eck-lab kibana endpoint: https://34.69.122.156:5601

eck-lab Fleet Server endpoint: https://35.224.137.190:8220

[SUMMARY] ca.crt is located in /Users/jlim/elastick8s/ca.crt

[NOTE] If you missed the summary its also in /Users/jlim/elastick8s/notes

[NOTE] You can start logging into kibana but please give things few minutes for proper startup and letting components settle down.

ECK operator upgrade

Upgrading the operator is fairly simple, though the steps differ depending on the version you are upgrading from. Depending on how the operator is updated, the upgrade can also trigger a rolling restart of your managed resources, and there are ways to avoid that rolling restart. I will not cover the ECK operator upgrade here, since you can run multiple versions of the stack under various versions of the operator, and the official documentation is very good and covers everything you need to upgrade the operator.

elasticsearch upgrade

Big thanks to the ECK operator: upgrading the stack is very easy.

From my deploy-elastick8s.sh script, our elasticsearch is deployed as eck-lab.

> kubectl get elasticsearch

NAME HEALTH NODES VERSION PHASE AGE

eck-lab green 3 8.6.2 Ready 49m

To upgrade you can do something as simple as:

- kubectl edit elasticsearch eck-lab, then look for version under spec and change the version from 8.6.2 to 8.7.0

- kubectl patch elasticsearch eck-lab --type='json' -p='[{"op": "replace", "path": "/spec/version", "value": "8.7.0"}]' - a one liner instead.

Once this is done, ECK will start a rolling upgrade of your nodes: one by one, it takes down an instance and brings up a new 8.7.0 instance until all the nodes have been updated.
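If you find yourself typing that patch for several resources, it can be wrapped in a small helper. This is only a sketch: build_version_patch and set_stack_version are names I made up for illustration, and the JSON is the same patch shown above with the version passed in as a parameter.

```shell
# Sketch: build the JSON patch used in this post and apply it to an ECK resource.
# build_version_patch and set_stack_version are hypothetical helper names.

# Print a JSON Patch document that replaces /spec/version with the given version.
build_version_patch() {
  local version="$1"
  printf '[{"op": "replace", "path": "/spec/version", "value": "%s"}]' "$version"
}

# Patch one ECK-managed resource, e.g. "elasticsearch eck-lab 8.7.0".
# Requires kubectl access to the cluster.
set_stack_version() {
  local kind="$1" name="$2" version="$3"
  kubectl patch "$kind" "$name" --type='json' -p="$(build_version_patch "$version")"
}
```

Calling set_stack_version elasticsearch eck-lab 8.7.0 should be equivalent to the one liner above.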

I saved the events from this change; they are listed below:

default 8m28s Normal Killing pod/eck-lab-es-default-2 Stopping container elasticsearch

default 7m36s Warning Unhealthy pod/eck-lab-es-default-2 Readiness probe failed: {"timestamp": "2023-05-04T03:12:14+00:00", "message": "readiness probe failed", "curl_rc": "7"}

default 7m34s Normal Scheduled pod/eck-lab-es-default-2 Successfully assigned default/eck-lab-es-default-2 to gke-justinlim-gke-default-pool-cf9a16c4-236w

default 7m25s Normal Pulled pod/eck-lab-es-default-2 Container image "docker.elastic.co/elasticsearch/elasticsearch:8.7.0" already present on machine

default 7m25s Normal Created pod/eck-lab-es-default-2 Created container elastic-internal-init-filesystem

default 7m25s Normal Started pod/eck-lab-es-default-2 Started container elastic-internal-init-filesystem

default 7m23s Normal Pulled pod/eck-lab-es-default-2 Container image "docker.elastic.co/elasticsearch/elasticsearch:8.7.0" already present on machine

default 7m23s Normal Created pod/eck-lab-es-default-2 Created container elastic-internal-suspend

default 7m23s Normal Started pod/eck-lab-es-default-2 Started container elastic-internal-suspend

default 7m22s Normal Started pod/eck-lab-es-default-2 Started container sysctl

default 7m22s Normal Created pod/eck-lab-es-default-2 Created container sysctl

default 7m22s Normal Pulled pod/eck-lab-es-default-2 Container image "docker.elastic.co/elasticsearch/elasticsearch:8.7.0" already present on machine

default 7m21s Normal Started pod/eck-lab-es-default-2 Started container elasticsearch

default 7m21s Normal Created pod/eck-lab-es-default-2 Created container elasticsearch

default 7m21s Normal Pulled pod/eck-lab-es-default-2 Container image "docker.elastic.co/elasticsearch/elasticsearch:8.7.0" already present on machine

default 7m9s Warning Unhealthy pod/eck-lab-es-default-2 Readiness probe failed: {"timestamp": "2023-05-04T03:12:41+00:00", "message": "readiness probe failed", "curl_rc": "7"}

default 7m4s Warning Unhealthy pod/eck-lab-es-default-2 Readiness probe failed: {"timestamp": "2023-05-04T03:12:46+00:00", "message": "readiness probe failed", "curl_rc": "7"}

default 7m Warning Unhealthy pod/eck-lab-es-default-2 Readiness probe failed: {"timestamp": "2023-05-04T03:12:50+00:00", "message": "readiness probe failed", "curl_rc": "7"}

default 6m55s Warning Unhealthy pod/eck-lab-es-default-2 Readiness probe failed: {"timestamp": "2023-05-04T03:12:55+00:00", "message": "readiness probe failed", "curl_rc": "7"}

default 6m50s Warning Unhealthy pod/eck-lab-es-default-2 Readiness probe failed: {"timestamp": "2023-05-04T03:13:00+00:00", "message": "readiness probe failed", "curl_rc": "7"}

default 6m45s Warning Unhealthy pod/eck-lab-es-default-2 Readiness probe failed: {"timestamp": "2023-05-04T03:13:05+00:00", "message": "readiness probe failed", "curl_rc": "7"}

default 6m40s Warning Unhealthy pod/eck-lab-es-default-2 Readiness probe failed: {"timestamp": "2023-05-04T03:13:10+00:00", "message": "readiness probe failed", "curl_rc": "7"}

default 6m35s Warning Unhealthy pod/eck-lab-es-default-2 Readiness probe failed: {"timestamp": "2023-05-04T03:13:15+00:00", "message": "readiness probe failed", "curl_rc": "7"}

default 6m30s Warning Unhealthy pod/eck-lab-es-default-2 Readiness probe failed: {"timestamp": "2023-05-04T03:13:20+00:00", "message": "readiness probe failed", "curl_rc": "7"}

default 6m15s Warning Unhealthy pod/eck-lab-es-default-2 (combined from similar events): Readiness probe failed: {"timestamp": "2023-05-04T03:13:35+00:00", "message": "readiness probe failed", "curl_rc": "7"}

default 5m31s Normal Killing pod/eck-lab-es-default-1 Stopping container elasticsearch

default 4m37s Normal Scheduled pod/eck-lab-es-default-1 Successfully assigned default/eck-lab-es-default-1 to gke-justinlim-gke-default-pool-17909339-47v3

default 4m28s Normal SuccessfulAttachVolume pod/eck-lab-es-default-1 AttachVolume.Attach succeeded for volume "pvc-7b0dcb2a-5729-4023-89f1-61b25dcb6def"

default 4m25s Normal Started pod/eck-lab-es-default-1 Started container elastic-internal-init-filesystem

default 4m25s Normal Created pod/eck-lab-es-default-1 Created container elastic-internal-init-filesystem

default 4m26s Normal Pulled pod/eck-lab-es-default-1 Container image "docker.elastic.co/elasticsearch/elasticsearch:8.7.0" already present on machine

default 4m23s Normal Created pod/eck-lab-es-default-1 Created container elastic-internal-suspend

default 4m23s Normal Started pod/eck-lab-es-default-1 Started container elastic-internal-suspend

default 4m23s Normal Pulled pod/eck-lab-es-default-1 Container image "docker.elastic.co/elasticsearch/elasticsearch:8.7.0" already present on machine

default 4m22s Normal Pulled pod/eck-lab-es-default-1 Container image "docker.elastic.co/elasticsearch/elasticsearch:8.7.0" already present on machine

default 4m22s Normal Created pod/eck-lab-es-default-1 Created container sysctl

default 4m22s Normal Started pod/eck-lab-es-default-1 Started container sysctl

default 4m21s Normal Started pod/eck-lab-es-default-1 Started container elasticsearch

default 4m21s Normal Created pod/eck-lab-es-default-1 Created container elasticsearch

default 4m21s Normal Pulled pod/eck-lab-es-default-1 Container image "docker.elastic.co/elasticsearch/elasticsearch:8.7.0" already present on machine

default 4m10s Warning Unhealthy pod/eck-lab-es-default-1 Readiness probe failed: {"timestamp": "2023-05-04T03:15:40+00:00", "message": "readiness probe failed", "curl_rc": "7"}

default 4m5s Warning Unhealthy pod/eck-lab-es-default-1 Readiness probe failed: {"timestamp": "2023-05-04T03:15:45+00:00", "message": "readiness probe failed", "curl_rc": "7"}

default 4m1s Warning Unhealthy pod/eck-lab-es-default-1 Readiness probe failed: {"timestamp": "2023-05-04T03:15:49+00:00", "message": "readiness probe failed", "curl_rc": "7"}

default 3m56s Warning Unhealthy pod/eck-lab-es-default-1 Readiness probe failed: {"timestamp": "2023-05-04T03:15:54+00:00", "message": "readiness probe failed", "curl_rc": "7"}

default 3m51s Warning Unhealthy pod/eck-lab-es-default-1 Readiness probe failed: {"timestamp": "2023-05-04T03:15:59+00:00", "message": "readiness probe failed", "curl_rc": "7"}

default 3m46s Warning Unhealthy pod/eck-lab-es-default-1 Readiness probe failed: {"timestamp": "2023-05-04T03:16:04+00:00", "message": "readiness probe failed", "curl_rc": "7"}

default 3m41s Warning Unhealthy pod/eck-lab-es-default-1 Readiness probe failed: {"timestamp": "2023-05-04T03:16:09+00:00", "message": "readiness probe failed", "curl_rc": "7"}

default 3m36s Warning Unhealthy pod/eck-lab-es-default-1 Readiness probe failed: {"timestamp": "2023-05-04T03:16:14+00:00", "message": "readiness probe failed", "curl_rc": "7"}

default 3m30s Warning Unhealthy pod/eck-lab-es-default-1 Readiness probe failed: {"timestamp": "2023-05-04T03:16:20+00:00", "message": "readiness probe failed", "curl_rc": "7"}

default 3m15s Warning Unhealthy pod/eck-lab-es-default-1 (combined from similar events): Readiness probe failed: {"timestamp": "2023-05-04T03:16:35+00:00", "message": "readiness probe failed", "curl_rc": "7"}

default 97s Normal Killing pod/eck-lab-es-default-0 Stopping container elasticsearch

default 97s Normal Scheduled pod/eck-lab-es-default-0 Successfully assigned default/eck-lab-es-default-0 to gke-justinlim-gke-default-pool-48cb1188-bl29

default 89s Normal Created pod/eck-lab-es-default-0 Created container elastic-internal-init-filesystem

default 89s Normal Pulled pod/eck-lab-es-default-0 Container image "docker.elastic.co/elasticsearch/elasticsearch:8.7.0" already present on machine

default 88s Normal Started pod/eck-lab-es-default-0 Started container elastic-internal-init-filesystem

default 85s Normal Started pod/eck-lab-es-default-0 Started container elastic-internal-suspend

default 85s Normal Created pod/eck-lab-es-default-0 Created container elastic-internal-suspend

default 85s Normal Pulled pod/eck-lab-es-default-0 Container image "docker.elastic.co/elasticsearch/elasticsearch:8.7.0" already present on machine

default 84s Normal Pulled pod/eck-lab-es-default-0 Container image "docker.elastic.co/elasticsearch/elasticsearch:8.7.0" already present on machine

default 84s Normal Created pod/eck-lab-es-default-0 Created container sysctl

default 84s Normal Started pod/eck-lab-es-default-0 Started container sysctl

default 83s Normal Pulled pod/eck-lab-es-default-0 Container image "docker.elastic.co/elasticsearch/elasticsearch:8.7.0" already present on machine

default 83s Normal Created pod/eck-lab-es-default-0 Created container elasticsearch

default 83s Normal Started pod/eck-lab-es-default-0 Started container elasticsearch

default 68s Warning Unhealthy pod/eck-lab-es-default-0 Readiness probe failed: {"timestamp": "2023-05-04T03:18:42+00:00", "message": "readiness probe failed", "curl_rc": "7"}

default 64s Warning Unhealthy pod/eck-lab-es-default-0 Readiness probe failed: {"timestamp": "2023-05-04T03:18:46+00:00", "message": "readiness probe failed", "curl_rc": "7"}

default 59s Warning Unhealthy pod/eck-lab-es-default-0 Readiness probe failed: {"timestamp": "2023-05-04T03:18:51+00:00", "message": "readiness probe failed", "curl_rc": "7"}

default 54s Warning Unhealthy pod/eck-lab-es-default-0 Readiness probe failed: {"timestamp": "2023-05-04T03:18:56+00:00", "message": "readiness probe failed", "curl_rc": "7"}

default 49s Warning Unhealthy pod/eck-lab-es-default-0 Readiness probe failed: {"timestamp": "2023-05-04T03:19:01+00:00", "message": "readiness probe failed", "curl_rc": "7"}

default 44s Warning Unhealthy pod/eck-lab-es-default-0 Readiness probe failed: {"timestamp": "2023-05-04T03:19:06+00:00", "message": "readiness probe failed", "curl_rc": "7"}

default 39s Warning Unhealthy pod/eck-lab-es-default-0 Readiness probe failed: {"timestamp": "2023-05-04T03:19:11+00:00", "message": "readiness probe failed", "curl_rc": "7"}

default 34s Warning Unhealthy pod/eck-lab-es-default-0 Readiness probe failed: {"timestamp": "2023-05-04T03:19:16+00:00", "message": "readiness probe failed", "curl_rc": "7"}

default 29s Warning Unhealthy pod/eck-lab-es-default-0 Readiness probe failed: {"timestamp": "2023-05-04T03:19:21+00:00", "message": "readiness probe failed", "curl_rc": "7"}

default 24s Warning Unhealthy pod/eck-lab-es-default-0 (combined from similar events): Readiness probe failed: {"timestamp": "2023-05-04T03:19:26+00:00", "message": "readiness probe failed", "curl_rc": "7"}

Also, during the upgrade you can run something like GET /_cat/nodes?v=true&h=id,ip,port,v,m to see the changes:

id ip port v m

l8Jx 10.85.128.20 9300 8.6.2 -

SToa 10.85.130.17 9300 8.6.2 *

id ip port v m

Ae_H 10.85.129.20 9300 8.7.0 -

l8Jx 10.85.128.20 9300 8.6.2 -

SToa 10.85.130.17 9300 8.6.2 *

id ip port v m

Ae_H 10.85.129.20 9300 8.7.0 -

l8Jx 10.85.128.20 9300 8.6.2 *

SToa 10.85.130.22 9300 8.7.0 -

id ip port v m

l8Jx 10.85.128.24 9300 8.7.0 -

SToa 10.85.130.22 9300 8.7.0 *

Ae_H 10.85.129.20 9300 8.7.0 -> kubectl get elasticsearch

NAME HEALTH NODES VERSION PHASE AGE

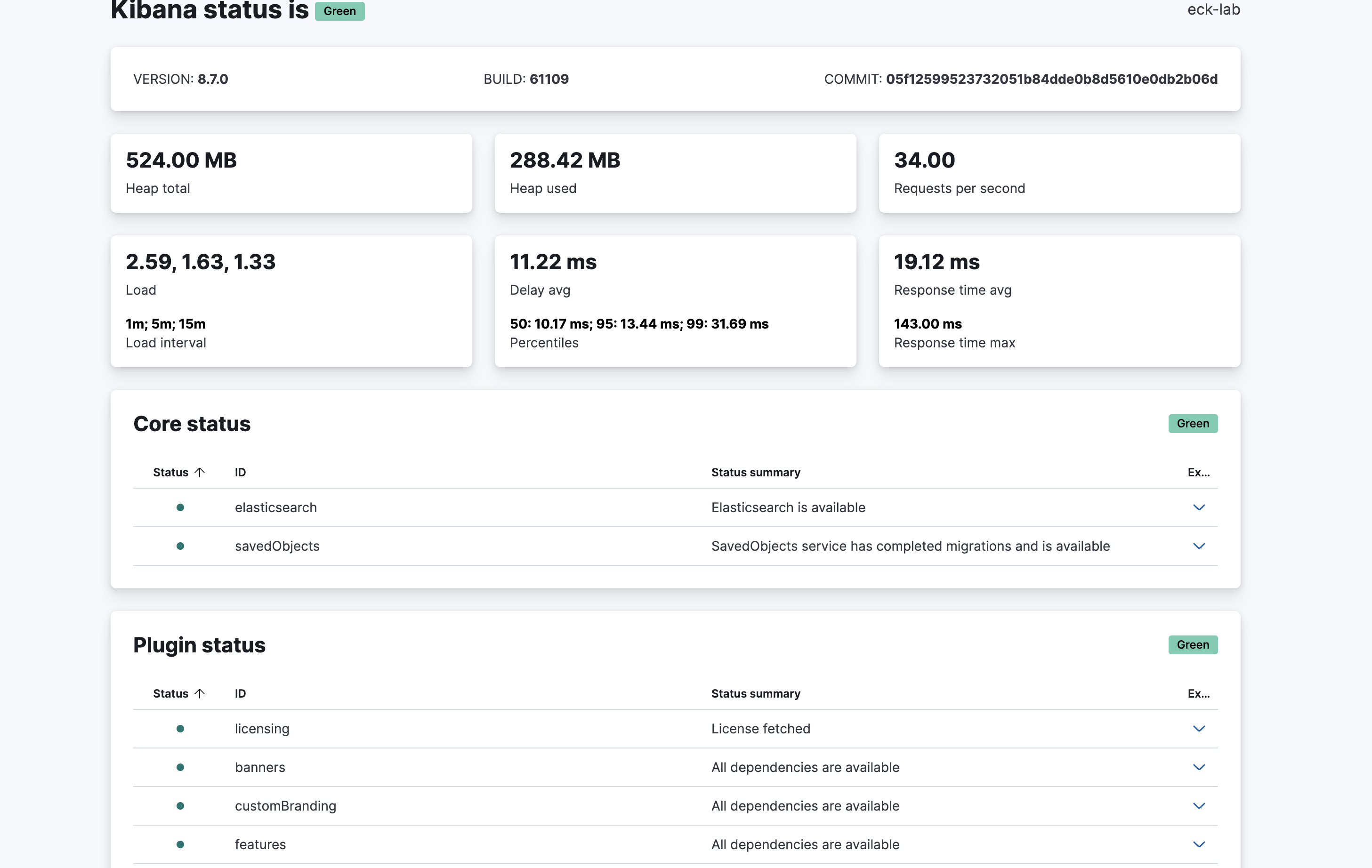

eck-lab green 3 8.7.0 Ready 77mkibana upgrade

Upgrading Kibana is just as simple as upgrading elasticsearch. You can do either of the following:

- kubectl edit kibana eck-lab, then look for version under spec and change the version from 8.6.2 to 8.7.0

- kubectl patch kibana eck-lab --type='json' -p='[{"op": "replace", "path": "/spec/version", "value": "8.7.0"}]' - a one liner.

The Kibana upgrade scales the existing Kibana replica set down to 0 instances, then creates a new replica set and sets its number of replicas to what you had originally.
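Since Kibana is unavailable for a short while during this, you may want to wait until the resource reports the new version before moving on to fleet-server. Below is a minimal polling sketch. wait_for_version is a hypothetical helper, and I am assuming that .status.version and .status.health are the fields behind the VERSION and HEALTH columns of kubectl get, so check them against your ECK version.

```shell
# Sketch: poll an ECK resource until it reports the target version and green health.
# wait_for_version is a hypothetical helper. The .status.version and .status.health
# fields are assumed to back the VERSION and HEALTH columns shown by kubectl get.
wait_for_version() {
  local kind="$1" name="$2" want="$3" tries="${4:-60}" delay="${5:-10}"
  local i=0 state=""
  while [ "$i" -lt "$tries" ]; do
    # Prints e.g. "8.7.0/green"; errors are ignored so a brief API hiccup just retries.
    state="$(kubectl get "$kind" "$name" \
      -o jsonpath='{.status.version}/{.status.health}' 2>/dev/null)"
    if [ "$state" = "$want/green" ]; then
      echo "$kind/$name is at $want and green"
      return 0
    fi
    i=$((i + 1))
    sleep "$delay"
  done
  echo "timed out waiting for $kind/$name to reach $want (last state: $state)" >&2
  return 1
}
```

For example, wait_for_version kibana eck-lab 8.7.0 checks every 10 seconds for up to 60 attempts. The same call should work for elasticsearch and agents, as long as those resources expose the same status fields.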

Events from this change are listed below:

0s Normal ScalingReplicaSet deployment/eck-lab-kb Scaled down replica set eck-lab-kb-54598dc788 to 0

0s Normal Killing pod/eck-lab-kb-54598dc788-2ckzp Stopping container kibana

0s Normal SuccessfulDelete replicaset/eck-lab-kb-54598dc788 Deleted pod: eck-lab-kb-54598dc788-2ckzp

0s Warning Unhealthy kibana/eck-lab Kibana health degraded

0s Warning Unhealthy pod/eck-lab-kb-54598dc788-2ckzp Readiness probe failed: Get "https://10.85.129.18:5601/login": dial tcp 10.85.129.18:5601: connect: connection refused

0s Warning Unhealthy pod/eck-lab-kb-54598dc788-2ckzp Readiness probe failed: Get "https://10.85.129.18:5601/login": dial tcp 10.85.129.18:5601: connect: connection refused

0s Normal ScalingReplicaSet deployment/eck-lab-kb Scaled up replica set eck-lab-kb-7547c87cc5 to 1

0s Normal Scheduled pod/eck-lab-kb-7547c87cc5-xvsj8 Successfully assigned default/eck-lab-kb-7547c87cc5-xvsj8 to gke-justinlim-gke-default-pool-cf9a16c4-236w

0s Normal SuccessfulCreate replicaset/eck-lab-kb-7547c87cc5 Created pod: eck-lab-kb-7547c87cc5-xvsj8

0s Warning Delayed agent/fleet-server Delaying deployment of Elastic Agent in Fleet Mode as Kibana is not available yet

0s Warning Delayed agent/elastic-agent Delaying deployment of Elastic Agent in Fleet Mode as Kibana is not available yet

0s Warning Delayed agent/elastic-agent Delaying deployment of Elastic Agent in Fleet Mode as Kibana is not available yet

0s Warning Delayed agent/fleet-server Delaying deployment of Elastic Agent in Fleet Mode as Kibana is not available yet

0s Warning Delayed agent/elastic-agent Delaying deployment of Elastic Agent in Fleet Mode as Kibana is not available yet

0s Warning Delayed agent/elastic-agent Delaying deployment of Elastic Agent in Fleet Mode as Kibana is not available yet

0s Warning Delayed agent/fleet-server Delaying deployment of Elastic Agent in Fleet Mode as Kibana is not available yet

0s Warning Delayed agent/elastic-agent Delaying deployment of Elastic Agent in Fleet Mode as Kibana is not available yet

0s Warning Delayed agent/fleet-server Delaying deployment of Elastic Agent in Fleet Mode as Kibana is not available yet

0s Warning Delayed agent/elastic-agent Delaying deployment of Elastic Agent in Fleet Mode as Kibana is not available yet

0s Warning Delayed agent/elastic-agent Delaying deployment of Elastic Agent in Fleet Mode as Kibana is not available yet

0s Normal Pulled pod/eck-lab-kb-7547c87cc5-xvsj8 Container image "docker.elastic.co/kibana/kibana:8.7.0" already present on machine

0s Normal Created pod/eck-lab-kb-7547c87cc5-xvsj8 Created container elastic-internal-init-config

0s Normal Started pod/eck-lab-kb-7547c87cc5-xvsj8 Started container elastic-internal-init-config

0s Warning Delayed agent/elastic-agent Delaying deployment of Elastic Agent in Fleet Mode as Kibana is not available yet

0s Warning Delayed agent/elastic-agent Delaying deployment of Elastic Agent in Fleet Mode as Kibana is not available yet

0s Normal Pulled pod/eck-lab-kb-7547c87cc5-xvsj8 Container image "docker.elastic.co/kibana/kibana:8.7.0" already present on machine

0s Normal Created pod/eck-lab-kb-7547c87cc5-xvsj8 Created container kibana

0s Normal Started pod/eck-lab-kb-7547c87cc5-xvsj8 Started container kibana

0s Warning Delayed agent/elastic-agent Delaying deployment of Elastic Agent in Fleet Mode as Kibana is not available yet

0s Warning Delayed agent/elastic-agent Delaying deployment of Elastic Agent in Fleet Mode as Kibana is not available yet

0s Warning Delayed agent/elastic-agent Delaying deployment of Elastic Agent in Fleet Mode as Kibana is not available yet

0s Warning Unhealthy pod/eck-lab-kb-7547c87cc5-xvsj8 Readiness probe failed: Get "https://10.85.129.21:5601/login": dial tcp 10.85.129.21:5601: connect: connection refused

0s Warning Delayed agent/elastic-agent Delaying deployment of Elastic Agent in Fleet Mode as Kibana is not available yet

0s Warning Unhealthy pod/eck-lab-kb-7547c87cc5-xvsj8 Readiness probe failed: Get "https://10.85.129.21:5601/login": dial tcp 10.85.129.21:5601: connect: connection refused

0s Warning ReconciliationError agent/elastic-agent Reconciliation error: failed to request https://eck-lab-kb-http.default.svc:5601/api/fleet/agent_policies?perPage=20&page=1, status is 503)

0s Warning Unhealthy pod/eck-lab-kb-7547c87cc5-xvsj8 Readiness probe failed: Get "https://10.85.129.21:5601/login": dial tcp 10.85.129.21:5601: connect: connection refused

0s Warning Unhealthy elasticsearch/eck-lab Elasticsearch cluster health degraded

> kubectl get kibana

NAME HEALTH NODES VERSION AGE

eck-lab green 1 8.7.0 75m

fleet-server upgrade

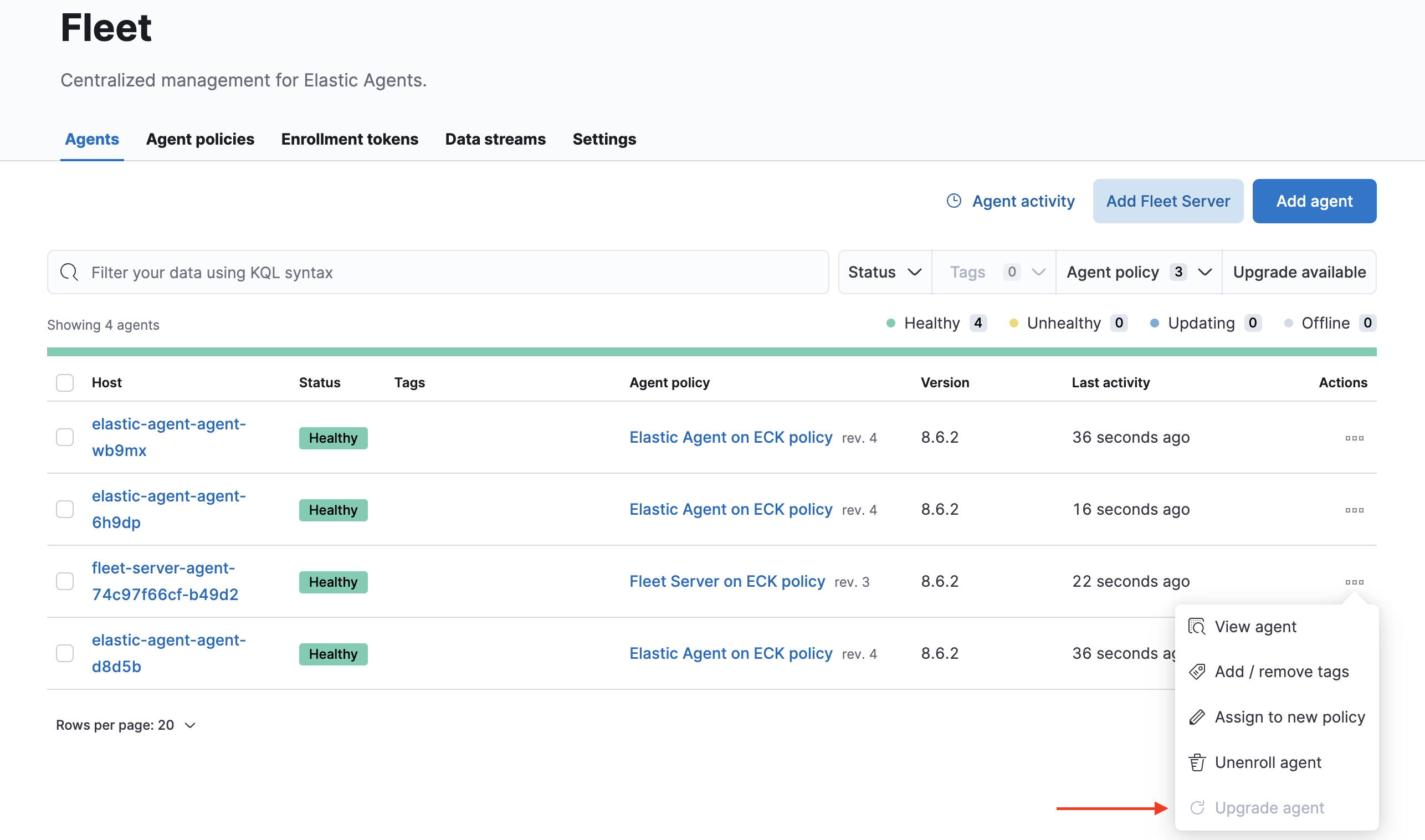

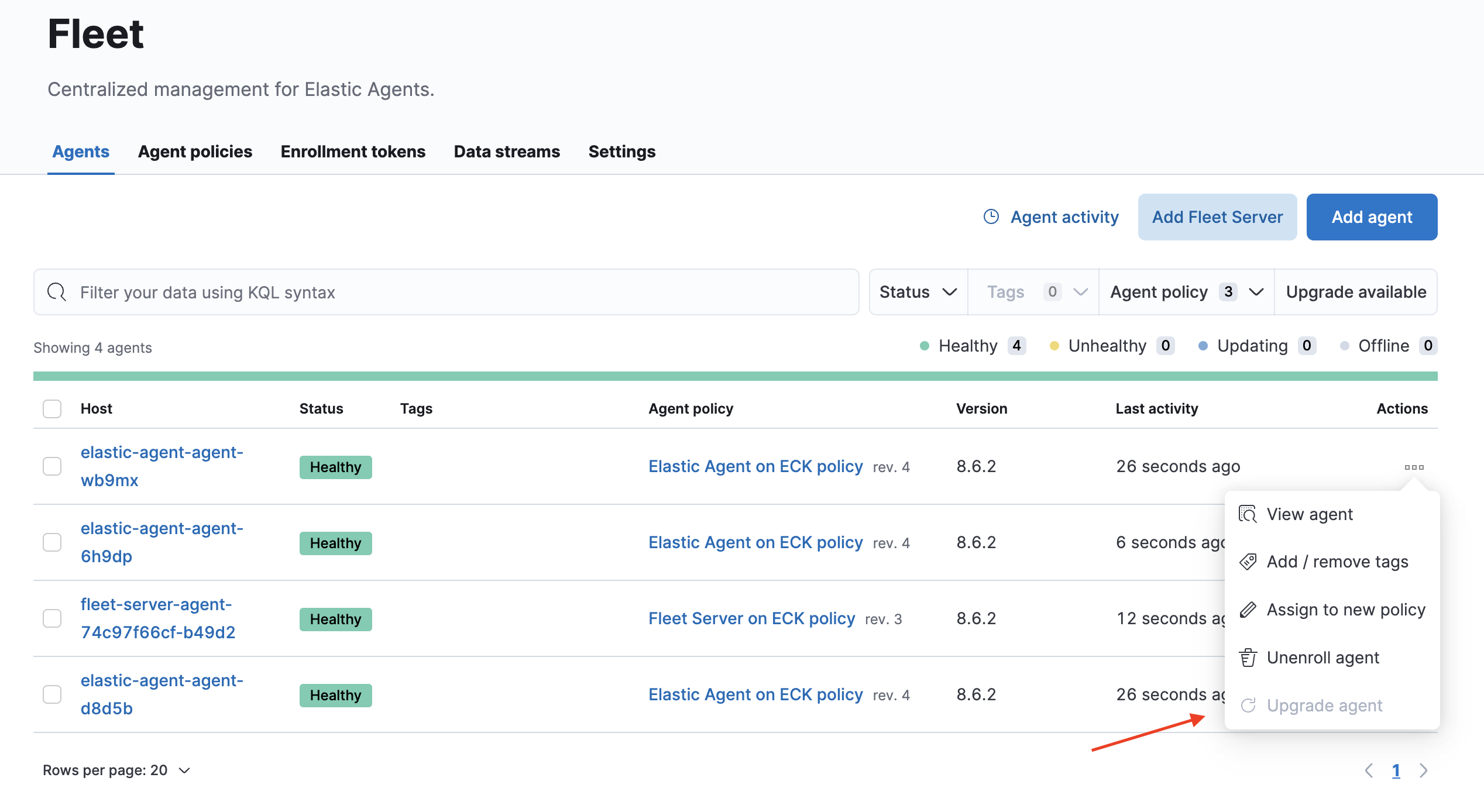

Normally, if your fleet server is standalone, you can use the upgrade button. However, if your fleet-server or elastic-agents are running in k8s, you will not be able to upgrade the agent via the Fleet UI.

So you will need to perform the same type of change as you did with your elasticsearch and kibana. fleet-server is deployed as an Agent resource, which is a resource type provided by ECK.

> kubectl get agents

NAME HEALTH AVAILABLE EXPECTED VERSION AGE

elastic-agent green 3 3 8.6.2 72m

fleet-server green 1 1 8.6.2 72m

To upgrade you can do something as simple as:

- kubectl edit agents fleet-server, then look for version under spec and change the version from 8.6.2 to 8.7.0

- kubectl patch agents fleet-server --type='json' -p='[{"op": "replace", "path": "/spec/version", "value": "8.7.0"}]' - a one liner.

The fleet-server upgrade creates a new ReplicaSet, scales it to the number of instances specified, then scales the old ReplicaSet down to 0.
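If you want to follow that rollout from the command line, kubectl rollout status can block until the workload settles. The sketch below is an illustration only: rollout_target and watch_rollout are hypothetical helper names, and the workload names are the ones from the kubectl output earlier in this post, so adjust them for your own deployment.

```shell
# Sketch: follow the rollout of the workloads that ECK creates for the Agent resources.
# Workload names come from the kubectl output earlier in this post.
# rollout_target and watch_rollout are hypothetical helper names.

# Map an Agent resource name to the workload ECK created for it in this lab.
rollout_target() {
  case "$1" in
    fleet-server)  echo "deployment/fleet-server-agent" ;;
    elastic-agent) echo "daemonset/elastic-agent-agent" ;;
    *) echo "unknown agent: $1" >&2; return 1 ;;
  esac
}

# Block until the rollout finishes or the timeout (default 300s) expires.
watch_rollout() {
  local target
  target="$(rollout_target "$1")" || return 1
  kubectl rollout status "$target" --timeout="${2:-300s}"
}
```

Run watch_rollout fleet-server shortly after patching the Agent resource. If it returns immediately, the operator may not have updated the Deployment yet, so it is worth running it again after a few seconds.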

> kubectl get agents fleet-server

NAME HEALTH AVAILABLE EXPECTED VERSION AGE

fleet-server green 1 1 8.7.0 75m

elastic-agent upgrade

The same is true for elastic-agents. If they are running in k8s, you cannot upgrade them via the Fleet UI, so you will need to perform the same type of upgrade.

To upgrade you can do something as simple as:

- kubectl edit agents elastic-agent, then look for version under spec and change the version from 8.6.2 to 8.7.0

- kubectl patch agents elastic-agent --type='json' -p='[{"op": "replace", "path": "/spec/version", "value": "8.7.0"}]' - a one liner.

The elastic-agent upgrade creates new pods in the DaemonSet and takes down the old ones.
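To recap the order used in this post (elasticsearch, then kibana, then fleet-server, then elastic-agent), here is a small sketch that only prints the patch commands so you can review them before running anything. plan_stack_upgrade is a hypothetical helper, and the resource names are the ones from this lab.

```shell
# Sketch: print the patch commands for the upgrade order followed in this post.
# plan_stack_upgrade is a hypothetical helper; resource names match this lab.
# Nothing is applied to the cluster, the commands are only printed.
plan_stack_upgrade() {
  local version="$1" target
  # Order: Elasticsearch, then Kibana, then Fleet Server, then the Elastic Agents.
  for target in "elasticsearch eck-lab" "kibana eck-lab" \
                "agents fleet-server" "agents elastic-agent"; do
    printf "kubectl patch %s --type='json' -p='[{\"op\": \"replace\", \"path\": \"/spec/version\", \"value\": \"%s\"}]'\n" \
      "$target" "$version"
  done
}

plan_stack_upgrade 8.7.0
```

I would still run the printed commands one at a time and let each component go green before moving to the next, rather than piping the whole plan into a shell.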

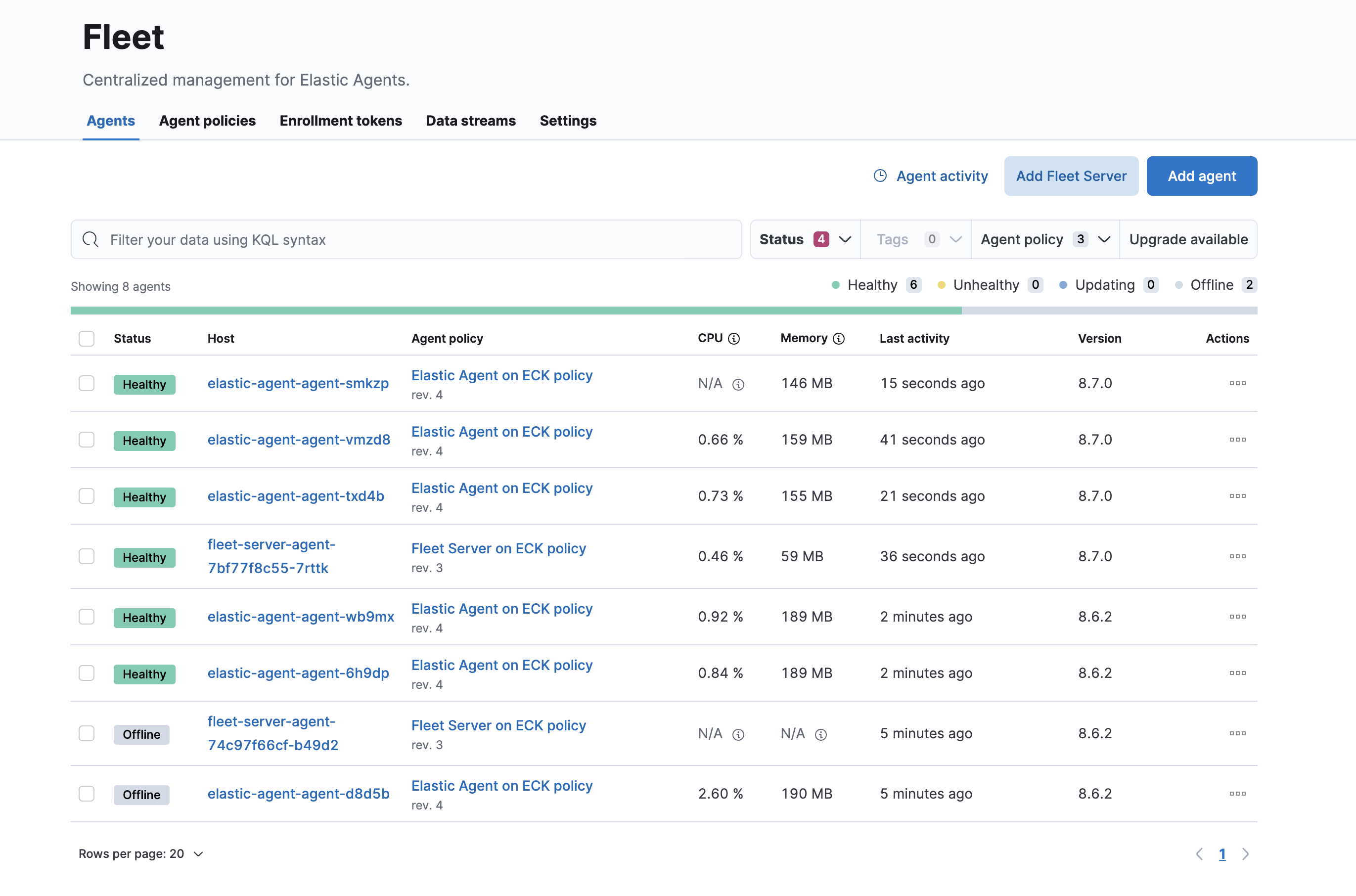

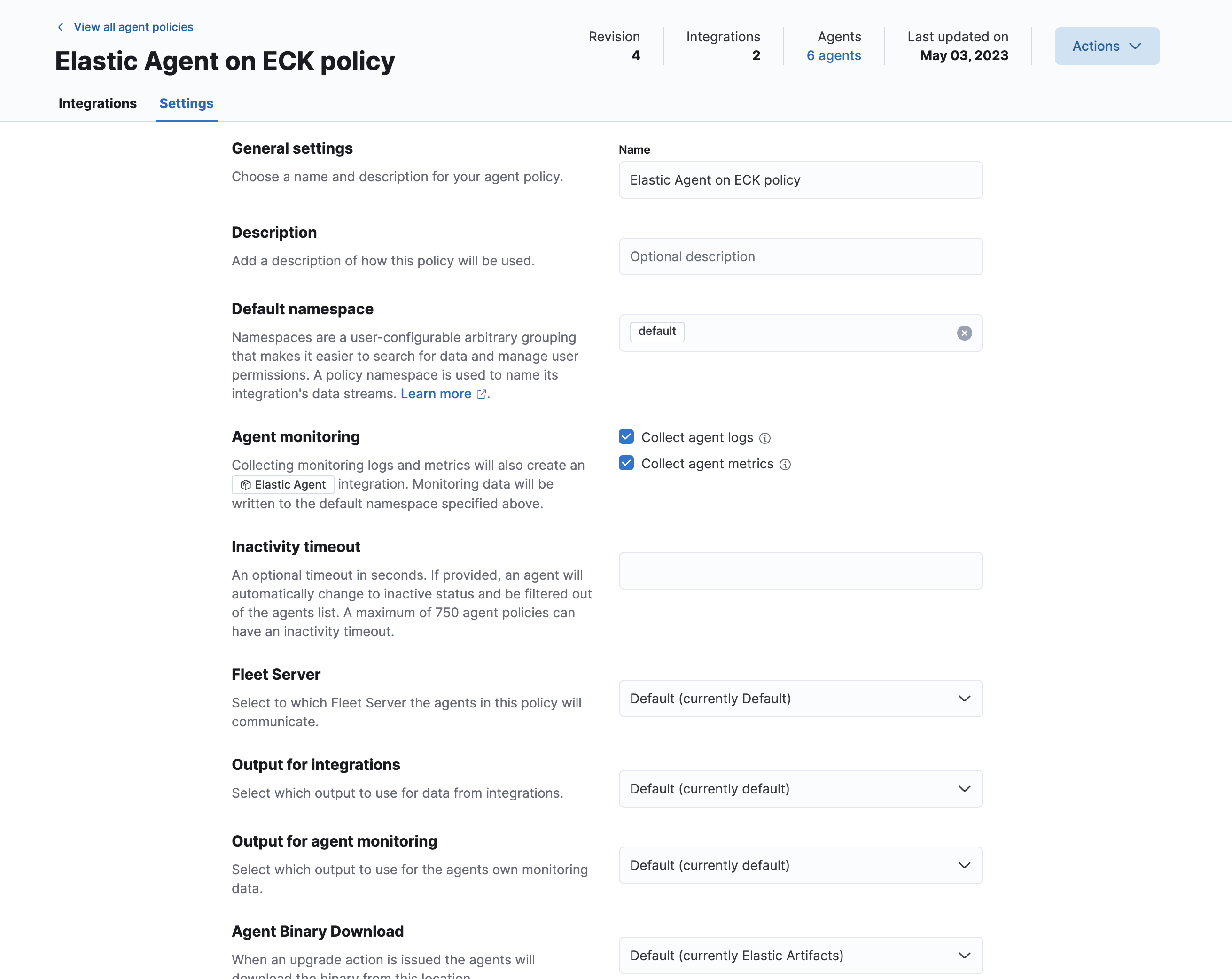

The old fleet server and elastic-agents will still show up, but their status will change to Offline. You can remove these manually, or go to the Agent policies and change the Inactivity timeout to have them removed automatically after the time set.

Hope this helps, and good luck on your upgrades! Always remember to have backups before making changes to your environment, and always test things out in dev/testing before performing them on prod.