Elastic Synthetics is still a beta feature, but lately many people are trying to use it and having a hard time configuring it in containers and in Kubernetes.

I started a simple stack deployment using my deploy-elastick8s.sh script and tested this out.

Create the stack

Configure Heartbeat

- Followed the documentation at https://www.elastic.co/guide/en/beats/heartbeat/current/running-on-kubernetes.html and used https://raw.githubusercontent.com/elastic/beats/8.7/deploy/kubernetes/heartbeat-kubernetes.yaml to create the Heartbeat resources.

- Added browser monitoring using https://www.elastic.co/guide/en/beats/heartbeat/8.7/monitor-browser-options.html

- Ran into some issues because browser monitors cannot be run as the root user.

- Needed to change the setup so that Heartbeat runs as the heartbeat user.

- Found https://github.com/elastic/beats/issues/29465#issuecomment-1024975901, which suggests adding a `securityContext` to `spec` and also to `spec.containers`, and changing the `data` volume from `hostPath` to `emptyDir`.
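
For quick reference, the non-root settings from the bullets above, as they end up in the Deployment used here, are condensed in the excerpt below. It is a trimmed fragment of the pod template (`spec.template.spec`), not a standalone manifest; the container's `volumeMounts` and all other fields are omitted. UID/GID 1000 is assumed to be the `heartbeat` user in the official image, and the comments are my reading of each field, not text taken from the linked issue.

```yaml
# Trimmed excerpt of spec.template.spec (not a complete Deployment).
securityContext:              # pod-level securityContext
  fsGroup: 1000               # supported volumes such as emptyDir get group ownership GID 1000
containers:
- name: heartbeat
  securityContext:            # container-level securityContext
    runAsNonRoot: true        # kubelet refuses to start the container as UID 0
    runAsUser: 1000           # assumed: UID of the heartbeat user in the image
    runAsGroup: 1000
    allowPrivilegeEscalation: false
    capabilities:
      add: [ NET_RAW ]        # raw sockets, as used by ICMP monitors
volumes:
- name: data
  emptyDir: {}                # replaces the hostPath data volume from the upstream manifest
```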

My current configuration looks like this:

    apiVersion: v1
    kind: ServiceAccount
    metadata:
      name: heartbeat
      namespace: kube-system
      labels:
        k8s-app: heartbeat
    ---

    apiVersion: rbac.authorization.k8s.io/v1
    kind: ClusterRole
    metadata:
      name: heartbeat
      labels:
        k8s-app: heartbeat
    rules:
    - apiGroups: [""]
      resources:
      - nodes
      - namespaces
      - pods
      - services
      verbs: ["get", "list", "watch"]
    - apiGroups: ["apps"]
      resources:
      - replicasets
      verbs: ["get", "list", "watch"]
    - apiGroups: ["batch"]
      resources:
      - jobs
      verbs: ["get", "list", "watch"]
    ---

    apiVersion: rbac.authorization.k8s.io/v1
    kind: Role
    metadata:
      name: heartbeat
      # should be the namespace where heartbeat is running
      namespace: kube-system
      labels:
        k8s-app: heartbeat
    rules:
    - apiGroups:
      - coordination.k8s.io
      resources:
      - leases
      verbs: ["get", "create", "update"]
    ---

    apiVersion: rbac.authorization.k8s.io/v1
    kind: Role
    metadata:
      name: heartbeat-kubeadm-config
      namespace: kube-system
      labels:
        k8s-app: heartbeat
    rules:
    - apiGroups: [""]
      resources:
      - configmaps
      resourceNames:
      - kubeadm-config
      verbs: ["get"]
    ---

    apiVersion: rbac.authorization.k8s.io/v1
    kind: ClusterRoleBinding
    metadata:
      name: heartbeat
    subjects:
    - kind: ServiceAccount
      name: heartbeat
      namespace: kube-system
    roleRef:
      kind: ClusterRole
      name: heartbeat
      apiGroup: rbac.authorization.k8s.io
    ---

    apiVersion: rbac.authorization.k8s.io/v1
    kind: RoleBinding
    metadata:
      name: heartbeat
      namespace: kube-system
    subjects:
    - kind: ServiceAccount
      name: heartbeat
      namespace: kube-system
    roleRef:
      kind: Role
      name: heartbeat
      apiGroup: rbac.authorization.k8s.io
    ---

    apiVersion: rbac.authorization.k8s.io/v1
    kind: RoleBinding
    metadata:
      name: heartbeat-kubeadm-config
      namespace: kube-system
    subjects:
    - kind: ServiceAccount
      name: heartbeat
      namespace: kube-system
    roleRef:
      kind: Role
      name: heartbeat-kubeadm-config
      apiGroup: rbac.authorization.k8s.io
    ---

apiVersion: v1

kind: ConfigMap

metadata:

name: heartbeat-deployment-config

namespace: kube-system

labels:

k8s-app: heartbeat

data:

heartbeat.yml: |-

heartbeat.monitors:

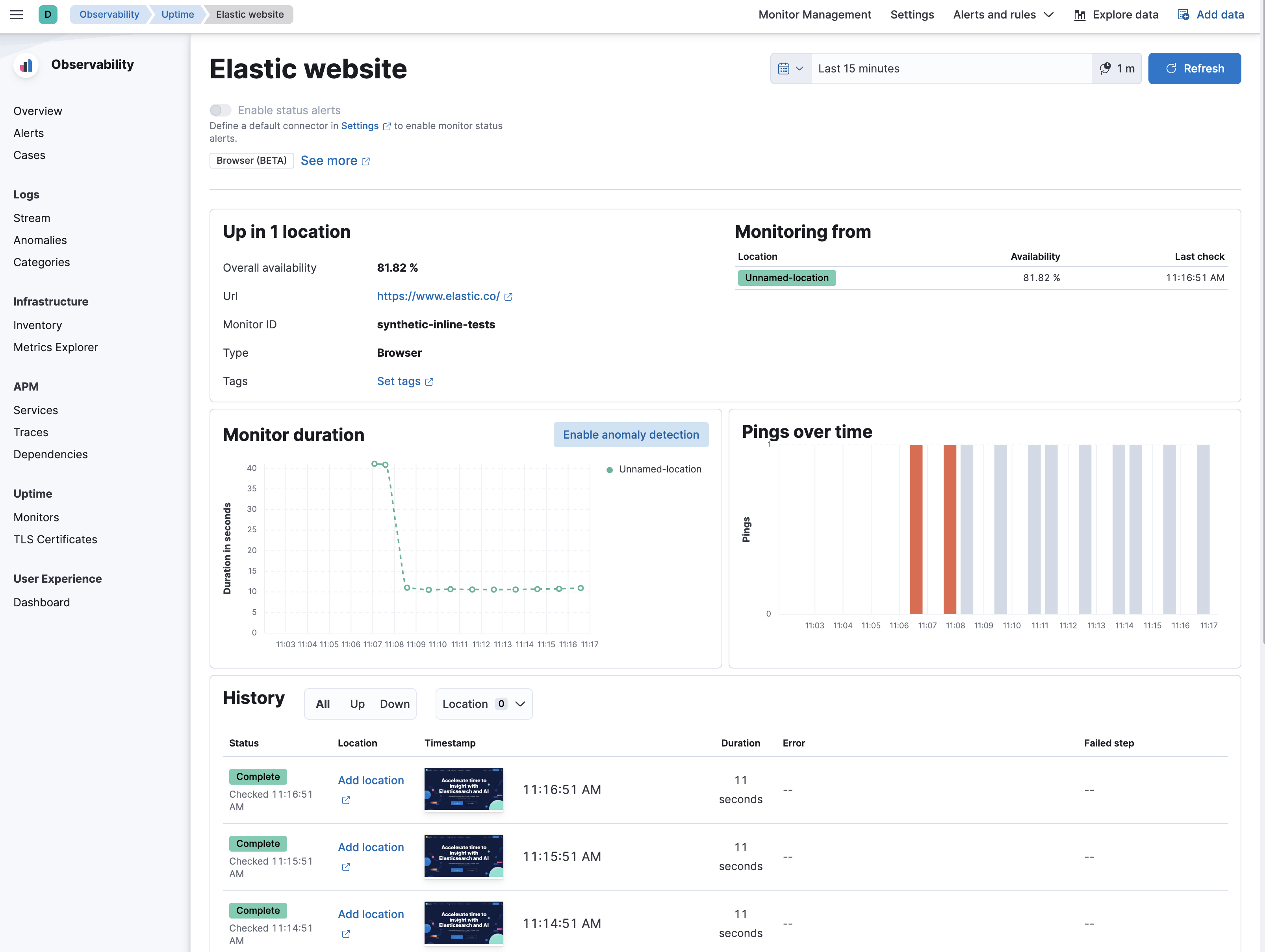

- type: browser

id: synthetic-inline-tests

name: Elastic website

schedule: '@every 1m'

source:

inline:

script: |-

step("load homepage", async () => {

await page.goto('https://www.elastic.co');

});

heartbeat.autodiscover: # Enable one or more of the providers below

providers:

- type: kubernetes

resource: pod

scope: cluster

node: ${NODE_NAME}

hints.enabled: true

- type: kubernetes

resource: service

scope: cluster

node: ${NODE_NAME}

hints.enabled: true

- type: kubernetes

resource: node

node: ${NODE_NAME}

scope: cluster

templates:

# Example, check SSH port of all cluster nodes:

- condition: ~

config:

- hosts:

- ${data.host}:22

name: ${data.kubernetes.node.name}

schedule: '@every 10s'

timeout: 5s

type: tcp

processors:

- add_cloud_metadata:

cloud.id: ${ELASTIC_CLOUD_ID}

cloud.auth: ${ELASTIC_CLOUD_AUTH}

output.elasticsearch:

hosts: ['https://${ELASTICSEARCH_HOST:elasticsearch}:${ELASTICSEARCH_PORT:9200}']

username: ${ELASTICSEARCH_USERNAME}

password: ${ELASTICSEARCH_PASSWORD}

ssl.verification_mode: none

---

# Deploy singleton instance in the whole cluster for some unique data sources, like kube-state-metrics

apiVersion: apps/v1

kind: Deployment

metadata:

name: heartbeat

namespace: kube-system

labels:

k8s-app: heartbeat

spec:

selector:

matchLabels:

k8s-app: heartbeat

template:

metadata:

labels:

k8s-app: heartbeat

spec:

serviceAccountName: heartbeat

hostNetwork: true

dnsPolicy: ClusterFirstWithHostNet

securityContext:

fsGroup: 1000

containers:

- name: heartbeat

image: docker.elastic.co/beats/heartbeat:8.6.2

args: [

"-c", "/etc/heartbeat.yml",

"-e",

]

env:

- name: ELASTICSEARCH_HOST

value: xxxxxxx

- name: ELASTICSEARCH_PORT

value: "9200"

- name: ELASTICSEARCH_USERNAME

value: elastic

- name: ELASTICSEARCH_PASSWORD

value: xxxxxxxx

- name: ELASTIC_CLOUD_ID

value:

- name: ELASTIC_CLOUD_AUTH

value:

- name: NODE_NAME

valueFrom:

fieldRef:

fieldPath: spec.nodeName

securityContext:

privileged: false

allowPrivilegeEscalation: false

runAsNonRoot: true

runAsUser: 1000

runAsGroup: 1000

capabilities:

add: [ NET_RAW ]

resources:

limits:

memory: 1536Mi

requests:

# for synthetics, 2 full cores is a good starting point for relatively consistent perform of a single concurrent check

# For lightweight checks as low as 100m is fine

cpu: 2000m

# A high value like this is encouraged for browser based monitors.

# Lightweight checks use substantially less, even 128Mi is fine for those.

memory: 1536Mi

volumeMounts:

- name: config

mountPath: /etc/heartbeat.yml

readOnly: true

subPath: heartbeat.yml

- name: data

mountPath: /usr/share/heartbeat/data

volumes:

- name: config

configMap:

defaultMode: 0644

name: heartbeat-deployment-config

- name: data

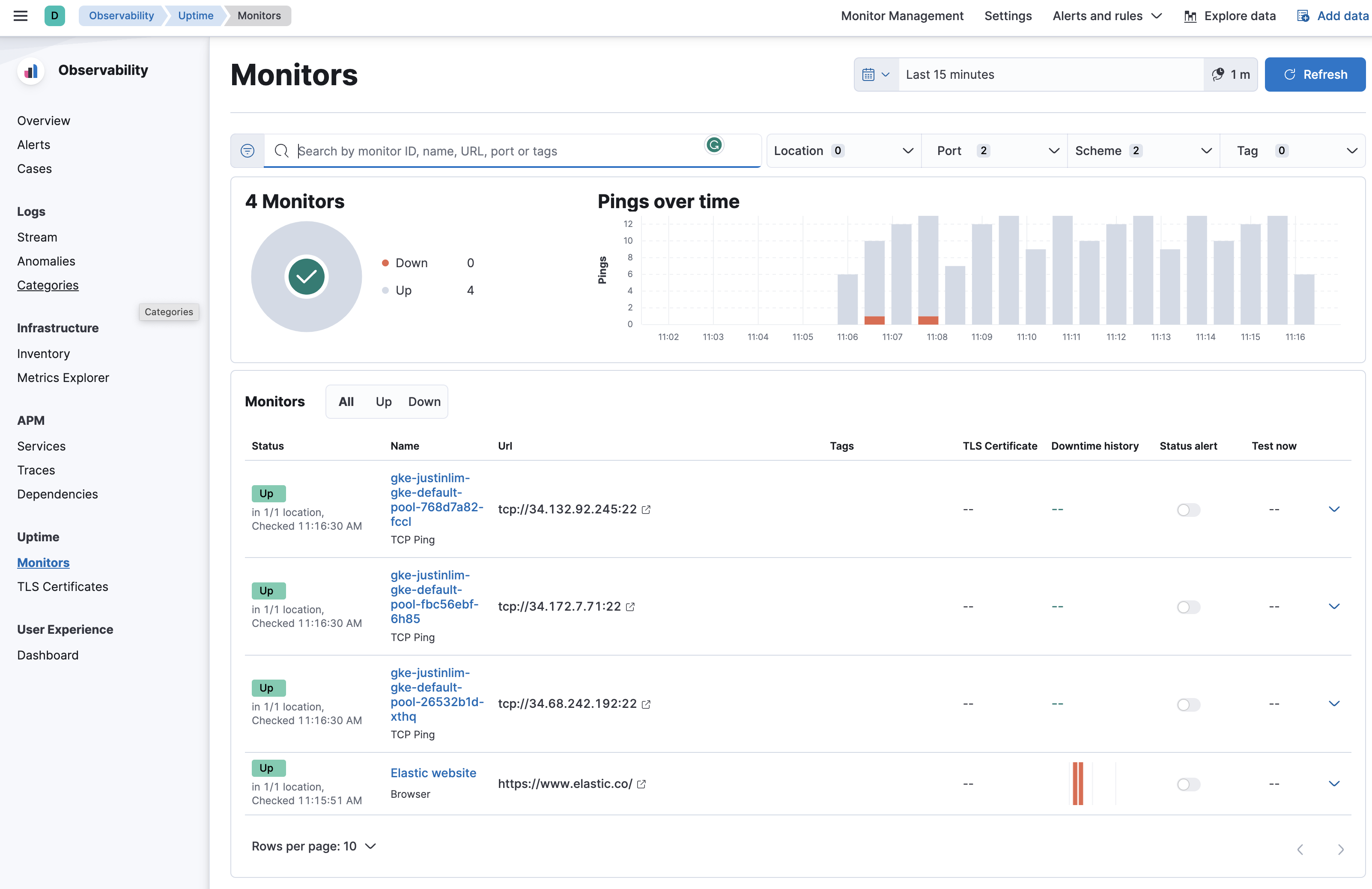

emptyDir: {}started up my monitoring and in kibana it shows

Why do I still get a permission denied error when creating the .synthetic folder inside the pod?