For this example I will stand up a very simple MinIO server on my localhost, create Kubernetes secrets for s3.client.default.access_key and s3.client.default.secret_key, and configure my Elasticsearch pod with an initContainer that installs the repository-s3 plugin and secureSettings that create the keystore.

MinIO server

This is a very simple, insecure setup intended only for testing.

$ mkdir data

$ wget https://dl.min.io/server/minio/release/linux-amd64/minio

$ chmod +x minio

$ ./minio server ./data

API: http://192.168.1.251:9000 http://172.17.0.1:9000 http://192.168.122.1:9000 http://192.168.49.1:9000 http://127.0.0.1:9000

RootUser: minioadmin

RootPass: minioadmin

Console: http://192.168.1.251:36012 http://172.17.0.1:36012 http://192.168.122.1:36012 http://192.168.49.1:36012 http://127.0.0.1:36012

RootUser: minioadmin

RootPass: minioadmin

Command-line: https://docs.min.io/docs/minio-client-quickstart-guide

$ mc alias set myminio http://192.168.1.251:9000 minioadmin minioadmin

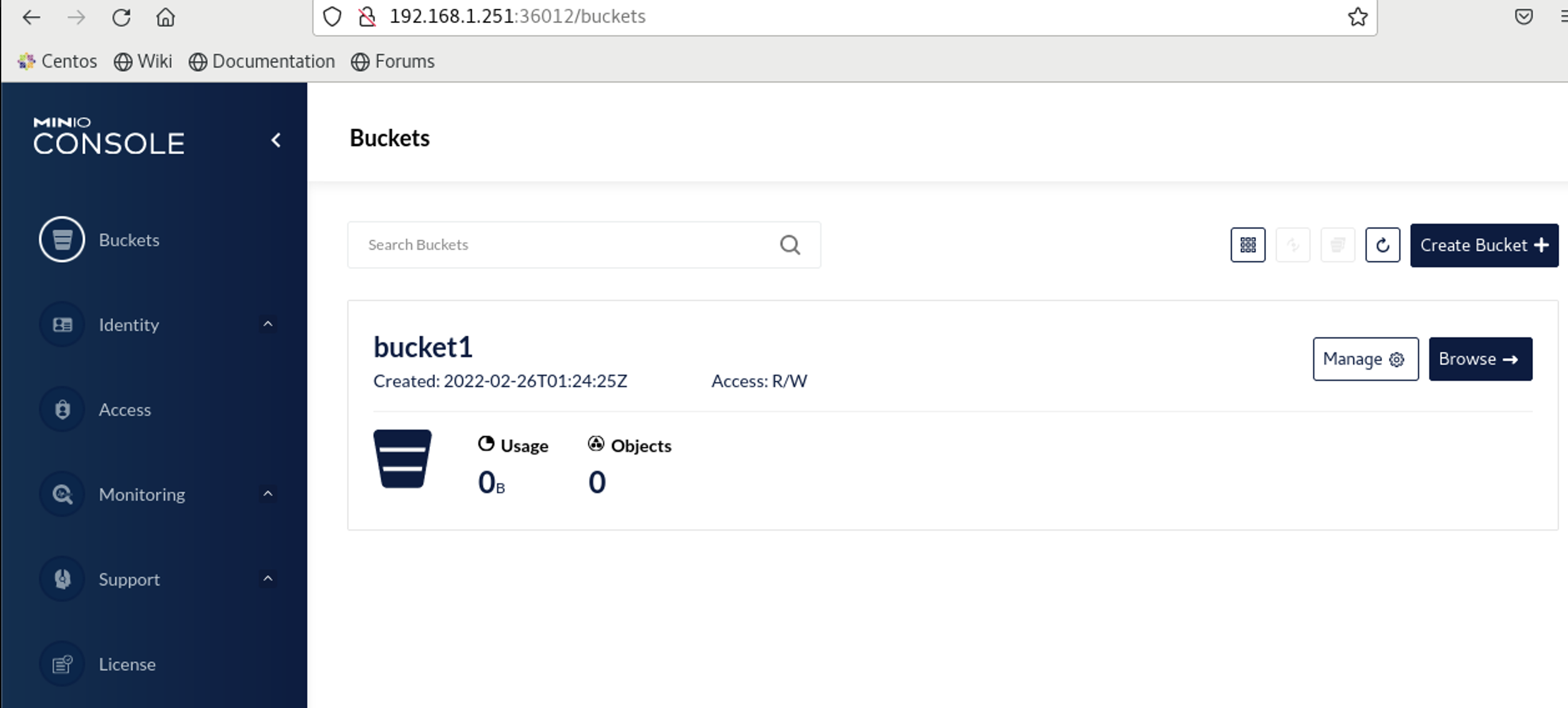

Instead of installing mc, I am just going to browse to the MinIO console and create a bucket named bucket1 (the bucket used in the repository settings later).
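If you'd rather script the bucket creation, a minimal sketch with the MinIO client is below. It assumes mc is installed and on your PATH and reuses the myminio alias and default credentials from the server banner above; create_bucket is just a hypothetical helper name.

```shell
#!/usr/bin/env bash
# Sketch: create the snapshot bucket with the MinIO client (mc) instead of the web console.
# Assumes mc is on PATH. create_bucket is a hypothetical helper name.
create_bucket() {
  local endpoint=${1:-http://192.168.1.251:9000}   # MinIO API address from the banner
  local bucket=${2:-bucket1}                       # bucket the ES repository will point at
  # Register the server under the alias "myminio" (the command MinIO prints at startup).
  mc alias set myminio "$endpoint" minioadmin minioadmin || return
  # Create the bucket, then list buckets to confirm it is there.
  mc mb "myminio/$bucket" || return
  mc ls myminio
}
```

Run it as create_bucket, or pass a different endpoint and bucket name as the two arguments.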

Creating secrets

There are many ways to create Kubernetes secrets. The simplest is to pass the values as literals:

$ kubectl create secret generic s3-creds --from-literal=s3.client.default.access_key='minioadmin' --from-literal=s3.client.default.secret_key='minioadmin'

secret/s3-creds created

Alternatively, you can write the secret as a YAML manifest and apply it:

$ cat s3.yaml

apiVersion: v1
kind: Secret
metadata:
  name: s3-creds
type: Opaque
data:
  s3.client.default.access_key: bWluaW9hZG1pbg==
  s3.client.default.secret_key: bWluaW9hZG1pbg==
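Values under data: have to be base64-encoded. Here is a small shell sketch for producing and double-checking them; b64enc and b64dec are my own helper names, and it assumes a base64 that accepts --decode (GNU coreutils does). The usual gotcha is echo, which appends a newline that then gets encoded into the credential.

```shell
#!/usr/bin/env bash
# Encode a value for a Secret's data: section. printf '%s' writes the value
# without a trailing newline, so only the credential itself is encoded.
b64enc() { printf '%s' "$1" | base64; }

# Decode a data: value to see exactly what will end up in the keystore.
b64dec() { printf '%s' "$1" | base64 --decode; }

b64enc minioadmin                   # bWluaW9hZG1pbg==
b64dec bWluaW9hZG1pbg==; echo       # minioadmin
echo minioadmin | base64            # bWluaW9hZG1pbgo=  (differs: the newline was encoded too)
```

The first line reproduces the value used in the manifest; the last one shows the newline-tainted variant to avoid.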

$ kubectl apply -f s3.yaml

Alternatively, you can use stringData, which takes plain-text values and lets Kubernetes handle the base64 encoding:

$ cat s3.yaml

apiVersion: v1
kind: Secret
metadata:
  name: s3-creds
type: Opaque
stringData:
  s3.client.default.access_key: minioadmin
  s3.client.default.secret_key: minioadmin

$ kubectl apply -f s3.yaml

We can check the secret with:

$ kubectl describe secrets s3-creds

Name: s3-creds

Namespace: default

Labels: <none>

Annotations: <none>

Type: Opaque

Data

====

s3.client.default.access_key: 10 bytes

s3.client.default.secret_key: 10 bytes

$ kubectl get secrets s3-creds -o go-template='{{index .data "s3.client.default.access_key" | base64decode}}'

minioadmin

$ kubectl get secrets s3-creds -o go-template='{{index .data "s3.client.default.secret_key" | base64decode}}'

minioadmin

Create Elasticsearch deployment with initContainer and keystore

I will create an Elasticsearch resource, add an initContainer that installs the repository-s3 plugin, and use secureSettings to load the secret into the keystore.

My manifest (prod-es.yaml):

---
apiVersion: elasticsearch.k8s.elastic.co/v1
kind: Elasticsearch
metadata:
  name: elasticsearch
spec:
  version: 7.17.0
  nodeSets:
  - name: default
    count: 1
    config:
      node.store.allow_mmap: false
    podTemplate:
      spec:
        initContainers:
        - name: install-plugins
          command:
          - sh
          - -c
          - |
            bin/elasticsearch-plugin install --batch repository-s3
      metadata:
        labels:
          scrape: es
  secureSettings:
  - secretName: s3-creds

SIDE NOTE:

If the keys in your Kubernetes secret don't match the keystore setting names, you can map them with entries. In this variant the secret stores the values as access_key and secret_key, and I write them to the keystore as s3.client.default.access_key and s3.client.default.secret_key:

secureSettings:
- secretName: s3-creds
  entries:
  - key: access_key
    path: s3.client.default.access_key
  - key: secret_key
    path: s3.client.default.secret_key

Deploy:

$ kubectl apply -f prod-es.yaml; kubectl get events -w

elasticsearch.elasticsearch.k8s.elastic.co/elasticsearch created

0s Normal NoPods poddisruptionbudget/elasticsearch-es-default No matching pods found

0s Normal NoPods poddisruptionbudget/elasticsearch-es-default No matching pods found

0s Normal SuccessfulCreate statefulset/elasticsearch-es-default create Claim elasticsearch-data-elasticsearch-es-default-0 Pod elasticsearch-es-default-0 in StatefulSet elasticsearch-es-default success

0s Normal ExternalProvisioning persistentvolumeclaim/elasticsearch-data-elasticsearch-es-default-0 waiting for a volume to be created, either by external provisioner "k8s.io/minikube-hostpath" or manually created by system administrator

0s Normal Provisioning persistentvolumeclaim/elasticsearch-data-elasticsearch-es-default-0 External provisioner is provisioning volume for claim "default/elasticsearch-data-elasticsearch-es-default-0"

0s Normal SuccessfulCreate statefulset/elasticsearch-es-default create Pod elasticsearch-es-default-0 in StatefulSet elasticsearch-es-default successful

0s Warning FailedScheduling pod/elasticsearch-es-default-0 0/1 nodes are available: 1 pod has unbound immediate PersistentVolumeClaims.

0s Normal ProvisioningSucceeded persistentvolumeclaim/elasticsearch-data-elasticsearch-es-default-0 Successfully provisioned volume pvc-783986ac-cdfe-43e2-9083-e6a5a15a4d76

0s Normal Scheduled pod/elasticsearch-es-default-0 Successfully assigned default/elasticsearch-es-default-0 to minikube

0s Normal Pulled pod/elasticsearch-es-default-0 Container image "docker.elastic.co/elasticsearch/elasticsearch:7.17.0" already present on machine

0s Normal Created pod/elasticsearch-es-default-0 Created container elastic-internal-init-filesystem

0s Normal Started pod/elasticsearch-es-default-0 Started container elastic-internal-init-filesystem

0s Normal Pulled pod/elasticsearch-es-default-0 Container image "docker.elastic.co/elasticsearch/elasticsearch:7.17.0" already present on machine

0s Normal Created pod/elasticsearch-es-default-0 Created container elastic-internal-init-keystore

0s Normal Started pod/elasticsearch-es-default-0 Started container elastic-internal-init-keystore

0s Normal Pulled pod/elasticsearch-es-default-0 Container image "docker.elastic.co/elasticsearch/elasticsearch:7.17.0" already present on machine

0s Normal Created pod/elasticsearch-es-default-0 Created container elastic-internal-suspend

0s Normal Started pod/elasticsearch-es-default-0 Started container elastic-internal-suspend

0s Normal Pulled pod/elasticsearch-es-default-0 Container image "docker.elastic.co/elasticsearch/elasticsearch:7.17.0" already present on machine

0s Normal Created pod/elasticsearch-es-default-0 Created container install-plugins

0s Normal Started pod/elasticsearch-es-default-0 Started container install-plugins

0s Normal Pulled pod/elasticsearch-es-default-0 Container image "docker.elastic.co/elasticsearch/elasticsearch:7.17.0" already present on machine

0s Normal Created pod/elasticsearch-es-default-0 Created container elasticsearch

0s Normal Started pod/elasticsearch-es-default-0 Started container elasticsearch

Let's go in and look at our keystore:

$ kubectl exec -it elasticsearch-es-default-0 -- bash

Defaulted container "elasticsearch" out of: elasticsearch, elastic-internal-init-filesystem (init), elastic-internal-init-keystore (init), elastic-internal-suspend (init), install-plugins (init)

root@elasticsearch-es-default-0:/usr/share/elasticsearch# bin/elasticsearch-keystore list

keystore.seed

s3.client.default.access_key

s3.client.default.secret_key

Everything looks great!
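To run the same check without opening an interactive shell (handy in scripts), something like the following should work. It is a sketch: has_s3_keys and check_keystore are hypothetical helper names, and it assumes the main container is named elasticsearch, which matches the "Defaulted container" line above.

```shell
#!/usr/bin/env bash
# Succeeds only if both S3 client settings appear, as whole lines, in a
# keystore listing read from stdin.
has_s3_keys() {
  local listing
  listing=$(cat)
  grep -qxF 's3.client.default.access_key' <<<"$listing" &&
    grep -qxF 's3.client.default.secret_key' <<<"$listing"
}

# List a pod's keystore non-interactively and check it.
# "--" separates kubectl's own flags from the command run in the container.
check_keystore() {
  kubectl exec "$1" -c elasticsearch -- bin/elasticsearch-keystore list | has_s3_keys
}
```

For example, check_keystore elasticsearch-es-default-0 && echo "S3 keys present". The same call should work for the Helm pod, elasticsearch-master-0.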

Test

We will test this by registering a repository and taking a snapshot.

Grab the elastic user's password:

$ kubectl get secret elasticsearch-es-elastic-user -o go-template='{{.data.elastic | base64decode}}'

FD4yJQ21n9Mziy0h6wn7f848

Log into Kibana, go to Dev Tools, and run:

PUT _snapshot/minio

{

"type": "s3",

"settings": {

"bucket": "bucket1",

"endpoint": "http://192.168.1.251:9000",

"path_style_access": "true"

}

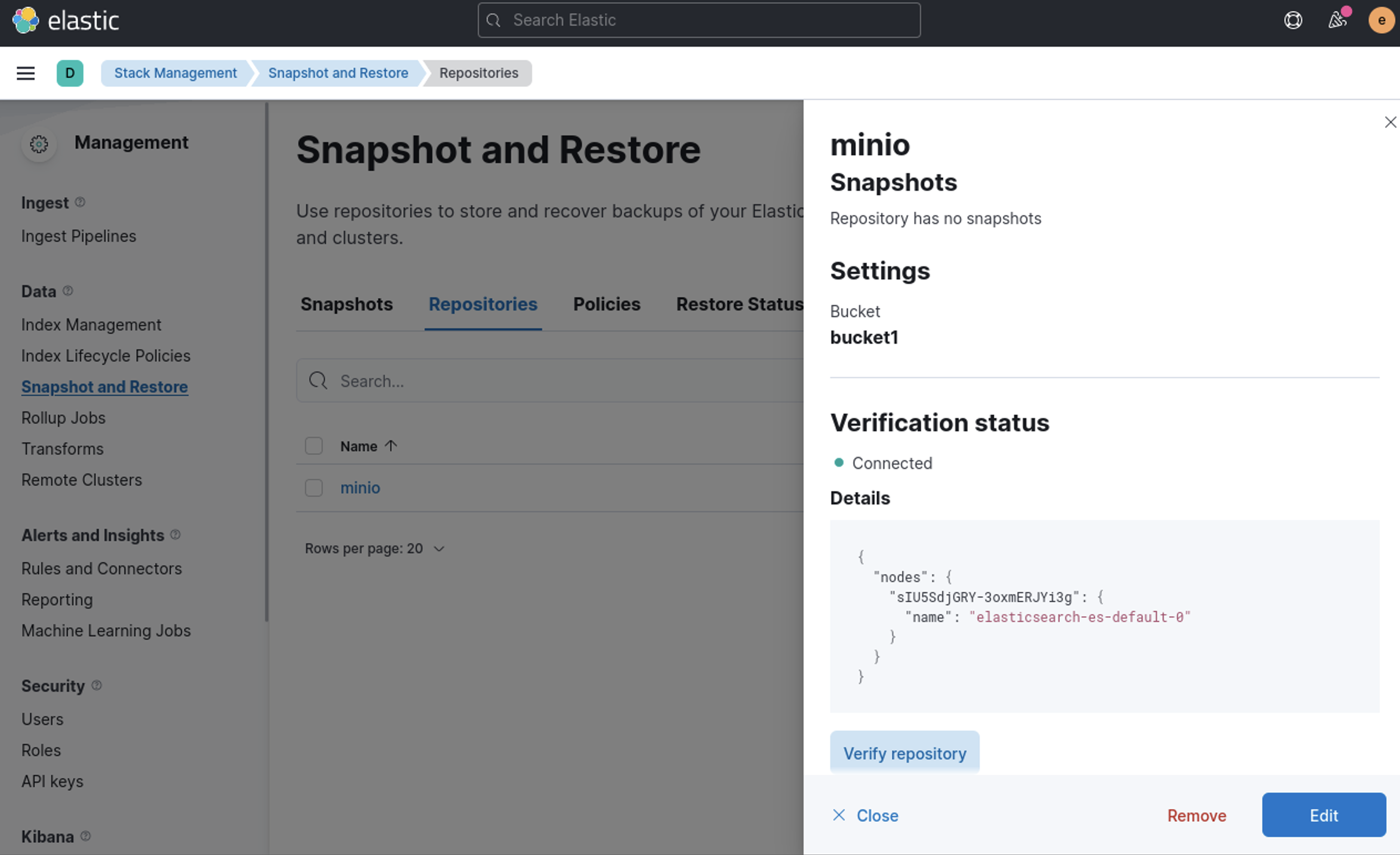

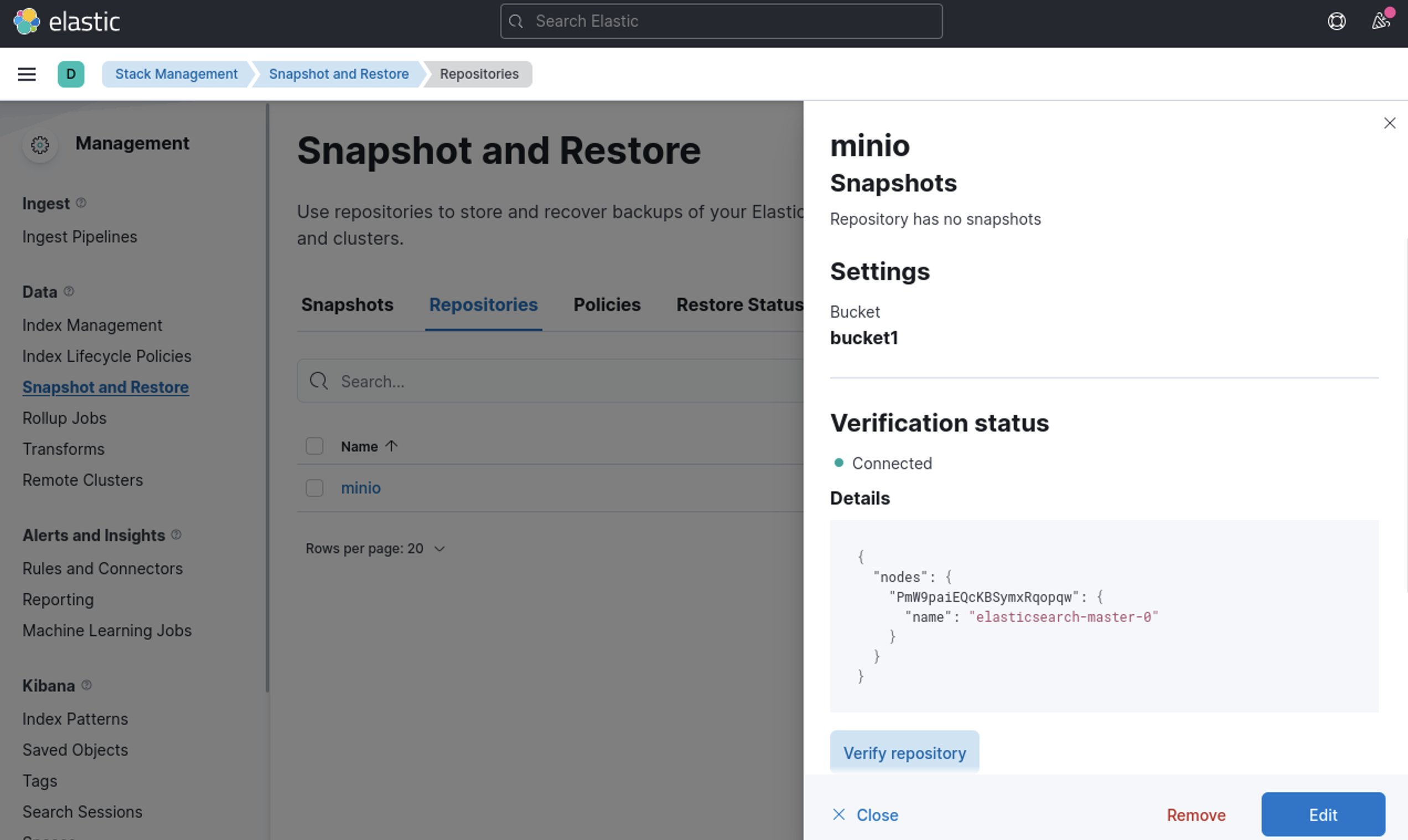

}Now goto Stack Management -> Snapshot and Restore -> Repositories - > minio -> verify repository

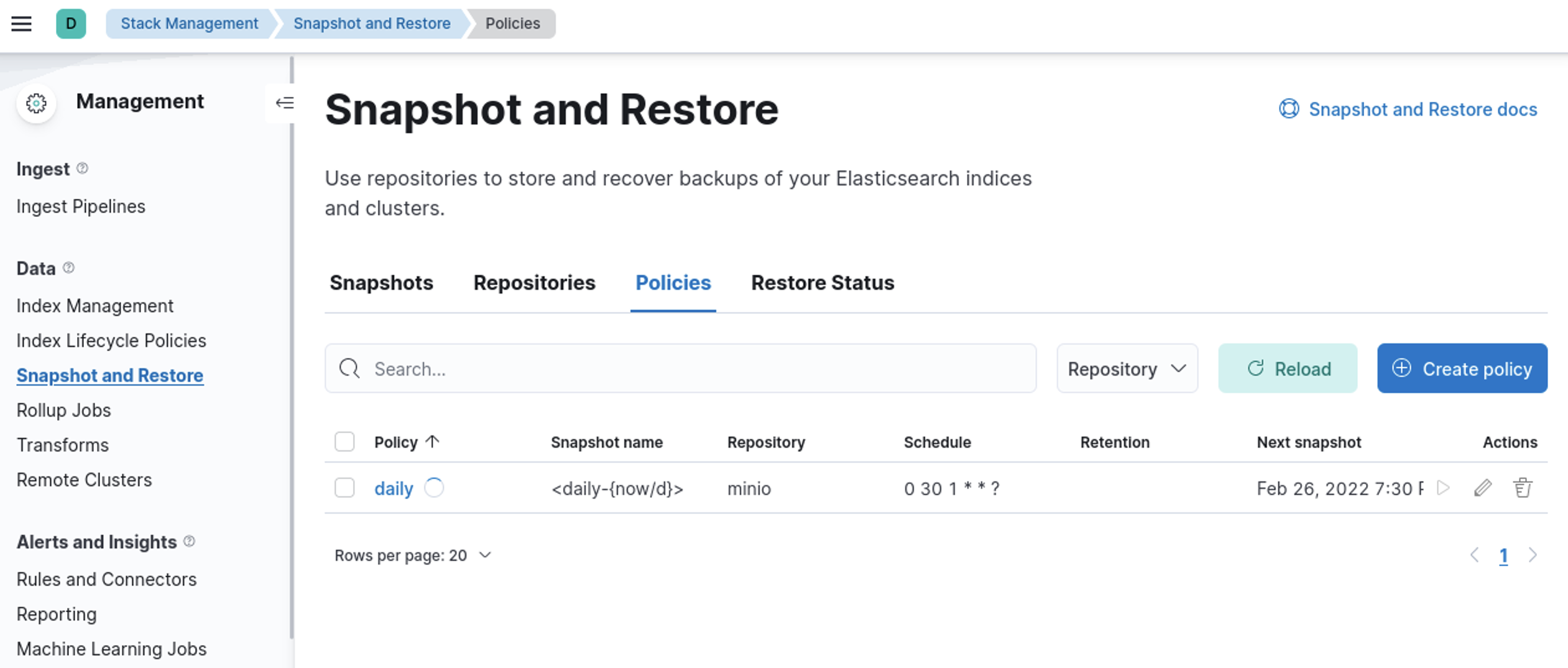

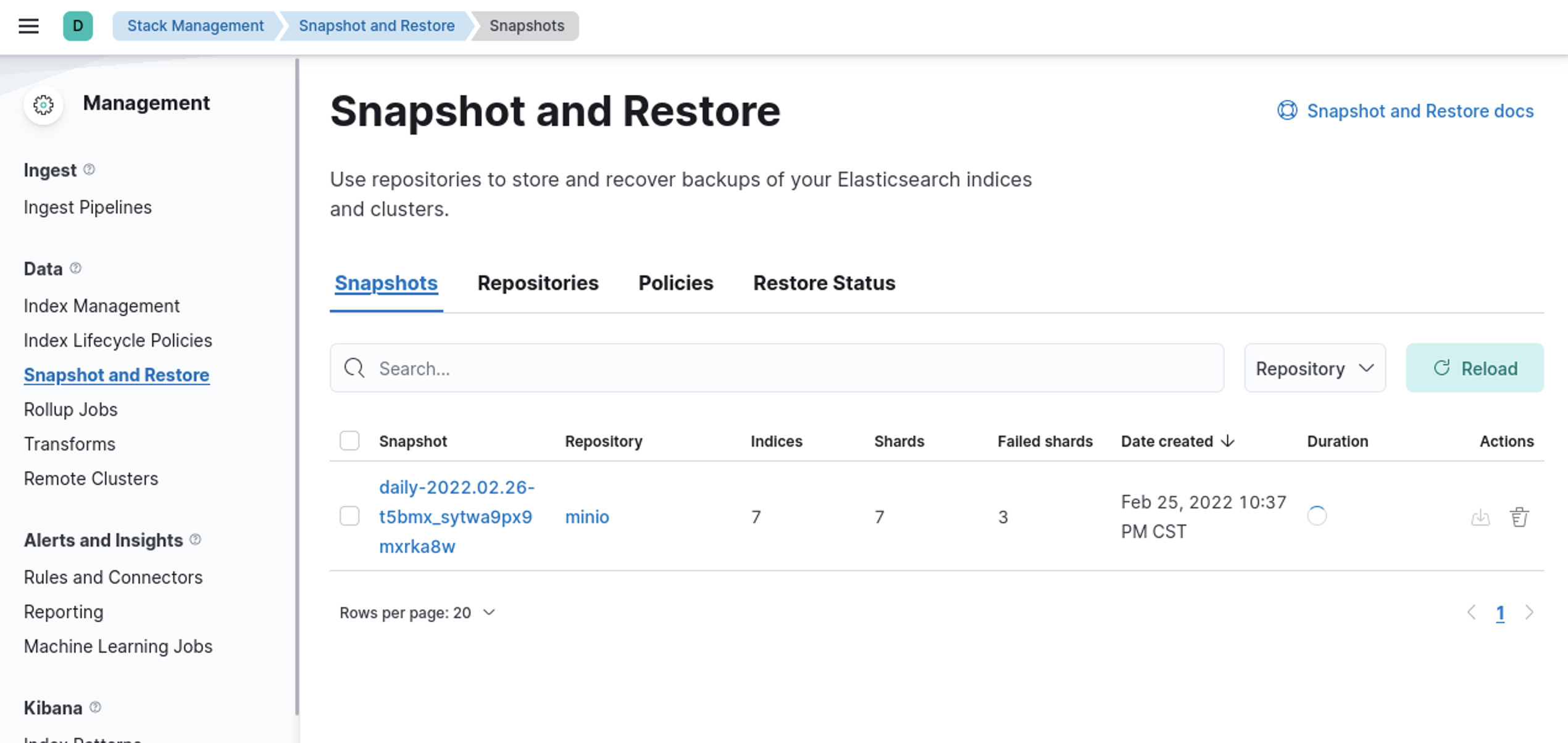
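The same registration and verification can also be done over the REST API instead of Dev Tools. This sketch rests on assumptions that are not part of the walkthrough: Elasticsearch is reachable at ES_URL (for ECK, for example through kubectl port-forward service/elasticsearch-es-http 9200), ES_PASS holds the elastic password fetched above, and skipping TLS verification with -k is acceptable because ECK serves a self-signed certificate by default. The helper names are hypothetical.

```shell
#!/usr/bin/env bash
# Sketch: register and verify the "minio" snapshot repository over the REST API.
# Assumes ES_URL points at Elasticsearch and ES_PASS holds the elastic password.
ES_URL=${ES_URL:-https://localhost:9200}

# JSON body equivalent to the Dev Tools request ($1 = bucket, $2 = MinIO endpoint).
repo_body() {
  printf '{"type":"s3","settings":{"bucket":"%s","endpoint":"%s","path_style_access":"true"}}' "$1" "$2"
}

# Minimal curl wrapper: es METHOD PATH [JSON_BODY]
# -k skips TLS verification (ECK's default certificate is self-signed).
es() {
  local args=(-sS -k -X "$1" "$ES_URL$2" -H 'Content-Type: application/json')
  if [ -n "${ES_PASS:-}" ]; then args+=(-u "elastic:$ES_PASS"); fi
  if [ -n "${3:-}" ]; then args+=(-d "$3"); fi
  curl "${args[@]}"
}

register_repo() { es PUT /_snapshot/minio "$(repo_body bucket1 http://192.168.1.251:9000)"; }
verify_repo()   { es POST /_snapshot/minio/_verify; }
```

For example, set ES_PASS=$(kubectl get secret elasticsearch-es-elastic-user -o go-template='{{.data.elastic | base64decode}}'), then call register_repo and verify_repo. For the Helm deployment later on, port-forward service/elasticsearch-master instead, set ES_URL=http://localhost:9200, and leave ES_PASS empty if security isn't enabled there.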

Awesome! Let's create a policy and take a snapshot.

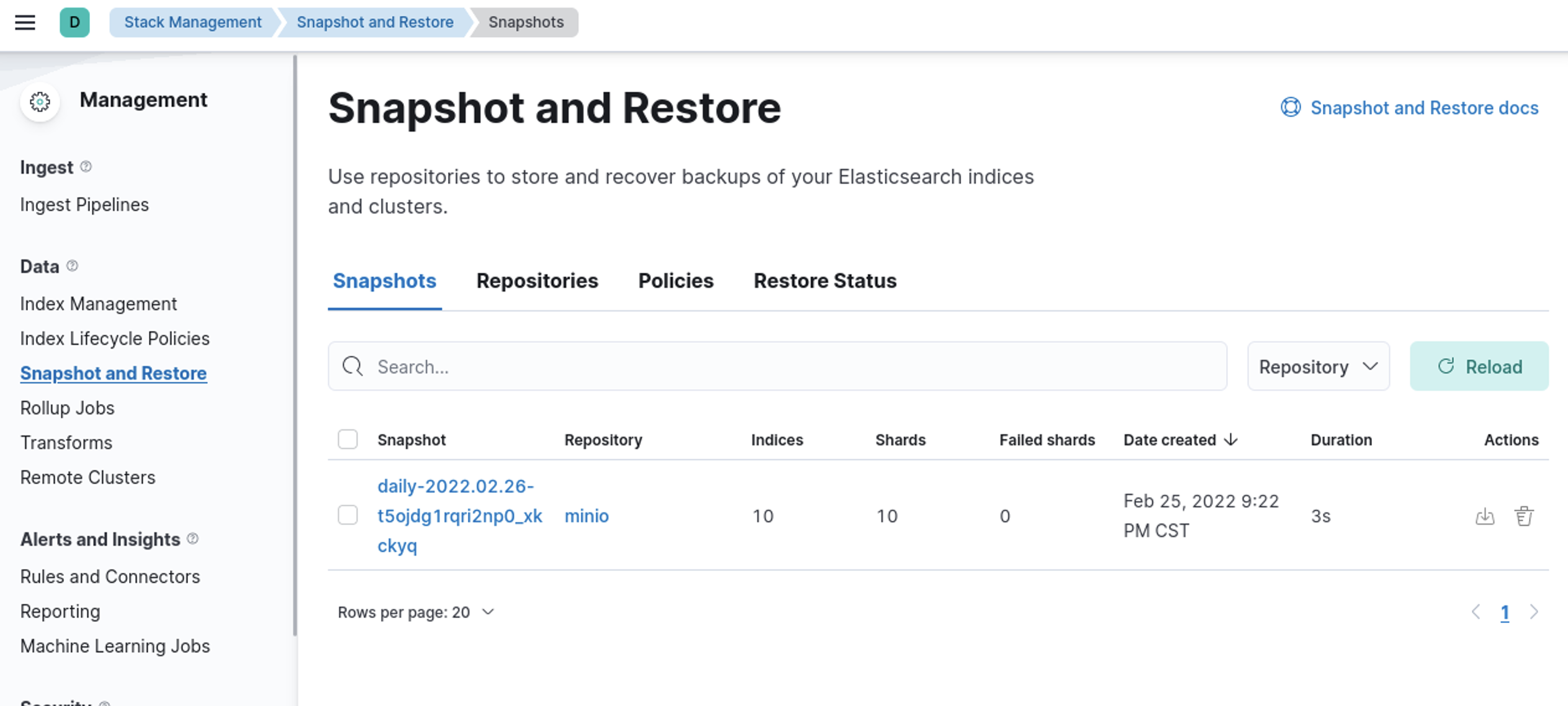

And we have snapshots!!
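A policy like the one created in Kibana can also be sketched with the snapshot lifecycle management (SLM) API. The policy id nightly-snapshots, the schedule, the snapshot name pattern, and the retention values are example choices, not necessarily what I picked in the UI. ES_URL and ES_PASS are assumed to point at the cluster (for ECK, for example via kubectl port-forward service/elasticsearch-es-http 9200 and the elastic password fetched above).

```shell
#!/usr/bin/env bash
# Sketch: create and trigger an SLM policy for the "minio" repository.
# Assumes ES_URL and ES_PASS are set; -k skips TLS verification (self-signed cert).
ES_URL=${ES_URL:-https://localhost:9200}

# Policy body: run daily at 01:30, snapshot all indices, expire snapshots after
# 30 days while keeping at least 5 and at most 50 ($1 = repository name).
slm_policy_body() {
  printf '{"schedule":"0 30 1 * * ?","name":"<nightly-snap-{now/d}>","repository":"%s","config":{"indices":["*"]},"retention":{"expire_after":"30d","min_count":5,"max_count":50}}' "$1"
}

create_policy() {
  curl -sS -k -u "elastic:$ES_PASS" -X PUT "$ES_URL/_slm/policy/nightly-snapshots" \
    -H 'Content-Type: application/json' -d "$(slm_policy_body minio)"
}

# Take a snapshot right away instead of waiting for the schedule.
run_policy_now() {
  curl -sS -k -u "elastic:$ES_PASS" -X POST "$ES_URL/_slm/policy/nightly-snapshots/_execute"
}

# List the snapshots stored in the repository.
list_snapshots() {
  curl -sS -k -u "elastic:$ES_PASS" "$ES_URL/_snapshot/minio/_all"
}
```

Call create_policy, then run_policy_now; once the snapshot finishes, list_snapshots should include it.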

Let's do this in Helm as well

Create our secret

$ kubectl create secret generic s3-creds --from-literal=s3.client.default.access_key='minioadmin' --from-literal=s3.client.default.secret_key='minioadmin'

secret/s3-creds created

Next, create a local container image with the plugin installed. My environment is minikube, so I use minikube ssh to build the image on the minikube node itself, where the cluster can find it locally without pushing it to a registry.

$ minikube ssh

docker@minikube:~$ mkdir a

docker@minikube:~$ cd a

docker@minikube:~/a$ cat > Dockerfile<<EOF

> FROM docker.elastic.co/elasticsearch/elasticsearch:7.17.0

> RUN bin/elasticsearch-plugin install --batch repository-s3

> EOF

docker@minikube:~/a$ cat Dockerfile

FROM docker.elastic.co/elasticsearch/elasticsearch:7.17.0

RUN bin/elasticsearch-plugin install --batch repository-s3

docker@minikube:~/a$ docker build -t es .

Sending build context to Docker daemon 2.048kB

Step 1/2 : FROM docker.elastic.co/elasticsearch/elasticsearch:7.17.0

---> 6fe993d6e7ed

Step 2/2 : RUN bin/elasticsearch-plugin install --batch repository-s3

---> Running in 3828eb6c07b7

-> Installing repository-s3

-> Downloading repository-s3 from elastic

@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@

@ WARNING: plugin requires additional permissions @

@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@

* java.lang.RuntimePermission accessDeclaredMembers

* java.lang.RuntimePermission getClassLoader

* java.lang.reflect.ReflectPermission suppressAccessChecks

* java.net.SocketPermission * connect,resolve

* java.util.PropertyPermission es.allow_insecure_settings read,write

See https://docs.oracle.com/javase/8/docs/technotes/guides/security/permissions.html

for descriptions of what these permissions allow and the associated risks.

-> Installed repository-s3

-> Please restart Elasticsearch to activate any plugins installed

Removing intermediate container 3828eb6c07b7

---> 86015a112bfe

Successfully built 86015a112bfe

Successfully tagged es:latest

docker@minikube:~/a$ docker image ls

REPOSITORY TAG IMAGE ID CREATED SIZE

es latest 86015a112bfe 3 seconds ago 618MB

We've created a custom container image named es:latest that has our plugin installed
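As an alternative to minikube ssh, you can point your local docker CLI at minikube's Docker daemon with minikube docker-env and build from the host. This is a sketch assuming minikube uses the docker container runtime; build_es_image is a hypothetical helper name and the temporary directory is only scratch space for the Dockerfile.

```shell
#!/usr/bin/env bash
# Sketch: build the es image from the host using minikube's Docker daemon,
# instead of running docker inside `minikube ssh`. Assumes the docker runtime.
build_es_image() {
  local dir status=0
  dir=$(mktemp -d) || return
  # Same Dockerfile as above: the 7.17.0 image plus the repository-s3 plugin.
  cat > "$dir/Dockerfile" <<'EOF'
FROM docker.elastic.co/elasticsearch/elasticsearch:7.17.0
RUN bin/elasticsearch-plugin install --batch repository-s3
EOF
  # docker-env prints export lines for minikube's daemon; the subshell keeps
  # them from leaking into the caller's environment.
  (eval "$(minikube docker-env)" && docker build -t es "$dir") || status=$?
  rm -rf "$dir"
  return "$status"
}
```

After build_es_image succeeds, es:latest should show up in minikube's image list just like the image built over ssh.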

My values.yaml file:

clusterName: "elasticsearch"
nodeGroup: "master"
masterService: ""
roles:
  master: "true"
  ingest: "true"
  data: "true"
  remote_cluster_client: "true"
  ml: "true"
replicas: 1
minimumMasterNodes: 1
esMajorVersion: ""
image: "es"
imageTag: "latest"
imagePullPolicy: "IfNotPresent"
antiAffinity: "soft"
esJavaOpts: "-Xmx512m -Xms512m"
keystore:
  - secretName: s3-creds
volumeClaimTemplate:
  accessModes: [ "ReadWriteOnce" ]
  storageClassName: "standard"
  resources:
    requests:
      storage: 100M

Create the deployment:

$ helm install elasticsearch elastic/elasticsearch -f ./values.yaml

NAME: elasticsearch

LAST DEPLOYED: Fri Feb 25 22:21:42 2022

NAMESPACE: default

STATUS: deployed

REVISION: 1

NOTES:

1. Watch all cluster members come up.

$ kubectl get pods --namespace=default -l app=elasticsearch-master -w

2. Test cluster health using Helm test.

$ helm --namespace=default test elasticsearch

$ kubectl get pod

NAME READY STATUS RESTARTS AGE

elasticsearch-master-0 1/1 Running 0 105s

$ kubectl exec -it elasticsearch-master-0 -- bash

Defaulted container "elasticsearch" out of: elasticsearch, configure-sysctl (init), keystore (init)

elasticsearch@elasticsearch-master-0:~$ bin/elasticsearch-keystore list

keystore.seed

s3.client.default.access_key

s3.client.default.secret_key

Now let's stand up a Kibana instance and test.

$ helm install kibana elastic/kibana --set imageTag=7.17.0

NAME: kibana

LAST DEPLOYED: Fri Feb 25 22:27:47 2022

NAMESPACE: default

STATUS: deployed

REVISION: 1

TEST SUITE: None

Time to access Kibana and add the repository (this can also be done via API calls).

Go to Dev Tools and run:

PUT _snapshot/minio
{
  "type": "s3",
  "settings": {
    "bucket": "bucket1",
    "endpoint": "http://192.168.1.251:9000",
    "path_style_access": "true"
  }
}

Now we can see the repository and verify that Elasticsearch can reach it.

Let's create a policy and take a snapshot.

And there you have it!

We have this working with Helm as well.